Nate Silver on AI, Politics, and Power

Nate Silver writes Silver Bulletin and is the author of On the Edge: The Art of Risking Everything, now in paperback with a new foreword.

In today’s conversation, we discuss…

Honesty, reputation, and paying the bills with writing,

Impact scenarios for the AI future, including how AI could impact elections and political decision-making,

The emerging synergy between prediction markets and journalism, and how Nate would build a team of professional Polymarket traders,

How to build a legacy,

Nate’s plan to reform US institutions and how that compares with real-world prospects for creating political change over the long term.


Mathematicizing Persuasion

Jordan Schneider: Before we get going, I just want to say thanks, Nate. Writing on the Internet is scary, and you’ve made it less scary. Getting to the point where I can just say things that I know are going to piss off administration officials and $4 trillion companies takes a lot. Watching you do that over the years has been a good lodestar for not caring what powerful and rich people think and working toward the truth.

Nate Silver: I appreciate it, Jordan. There’s an equilibrium where people are way too short-term focused and too susceptible to peer pressure.

Conversely, once you develop a reputation for doing your reporting and speaking from a place of knowledge and experience — without trying to sanitize things too much — you develop trust with your audience. You carve out more of a distinct niche. People are too afraid of honesty and differentiation.

It’s easy to say if you cover fields that are popular and get a lot of audience and traffic, whether that’s electoral politics or sports. I’m not doing investigative reporting here, but I do think that working hard and being the best version of yourself — and being an honest version of yourself — is usually a smart strategy in the long run.

Jordan Schneider: That’s even more difficult with policy writing. ChinaTalk is closer to a think tank than it is to journalism. The vast majority of people who work in this field can’t make public comments because they either work in the government or work in government relations for a big company. Even if you’re at a think tank, you have to pay the bills somehow, and that basically means getting corporate sponsorship for your work.

Nate Silver: As a consumer, there are lots of issues — including China — where I’m not quite sure who to believe or trust. China is among many issues where I feel I’d have to invest a lot of time investigating who I can trust. At that point, you could almost write about it yourself. There are a lot of issues where there’s no kind of trustworthy authority. Kowtowing to corporate power is part of it. That’s part of the beauty of having a Substack model with no advertising.


But especially in diplomatic and international relations, people are always calibrating what they say. There are smart commentators, but you have to read between the lines — sway the reads 20 degrees left or right.

Jordan Schneider: There’s this recent micro-scandal. Robert O’Brien, former national security advisor, wrote an op-ed saying we should sell lots of Nvidia chips to China. It comes out three days later that Nvidia is a client of his.

Nate Silver: I’m never quite sure whether to assess these arguments tabula rasa and ignore who’s making the argument, versus considering that people have long-term credibility and reputational issues. One thing I do is play poker, and in poker, the same action from a different player can mean massively different things. A lot of stuff is subtextual. A lot of stuff is deliberately ambiguous.

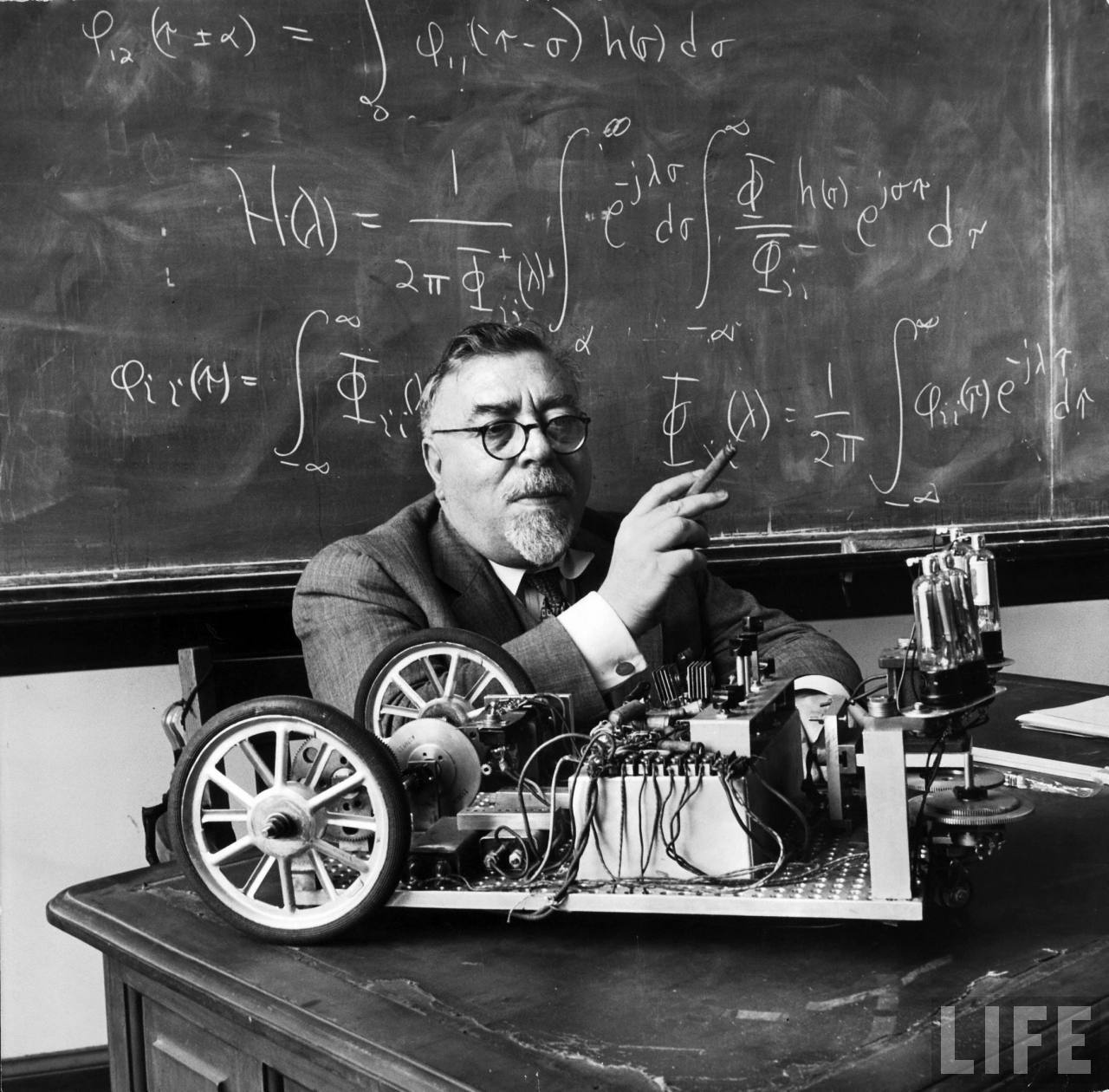

One reason why I think large language models are interesting is because they understand that you can mathematicize language. In some ways, language is a game in the sense of game theory — it’s strategic. What we say, exactly how we say it, and what’s left unsaid is often powerful. A single word choice can matter a lot.

You probably think about this in a different context — in the case of official statements by the Chinese government, for example.

Jordan Schneider: Let’s stay on this, because this was actually one of my mega brain takes that I want your response to. We have Nate Silver at 11 years old wanting to be president, and now we’ve had 20 years of him thinking about analyzing presidents and presidential candidates and what they do. You don’t necessarily have to believe in AGI and fast takeoff to think that 10, 20, 30 years down the road, a lot of the decisions that presidents and executives make today — an AI will just strictly dominate what the human would do.

Maybe that Cerberus moment becomes what the Swedish Prime Minister was saying a few days ago — “Oh yeah, I ask ChatGPT all the time for advice.” I’m curious, what parts of the things that presidents and presidential candidates do you think are going to be automated the fastest, assuming we just let them ingest all of the data that a president or executive would be able to consume themselves?

Nate Silver: AI in its current form might be an improvement over a lot of our elected government officials, but that says a lot more about the officials than the AI necessarily.

I don’t take for granted — and some people do, including people who know a lot about the subject — that we’re going to achieve superhuman general intelligence. There are different meanings between these terms that we can parse if you want. But some of the reason that large language models are good now is because they train on human data and they get reinforcement learning with human feedback.

There are cases — pure math problems — where you can extrapolate out from the training set in a logical way. For more subjective things, political statements, I don’t know as much.

Some people believe that AIs could become super persuasive. I’m skeptical. First of all, humans will be skeptical of AI-generated output, although maybe I’m more skeptical than the average person might be. Also, it’s a dynamic equilibrium. You can message test, have an AI train, and it can figure out: “Okay, we’re running all these ads and sending out all these fundraising emails, and we can now predict which will get a higher response rate.”

But when people start seeing the same email — “Nancy Pelosi says this or that” — 50 or 100 times, then they adjust and react and backlash to it. In domains where you are approaching some equilibrium, profit’s not easy to have. There are no easy tricks. You have to play a robust, smart strategy, and ultimately the strength of your hand matters, along with how precisely you’re constructing the mix of strategies that you take at any given time.

I’d bucket it roughly as a 25% chance that on relatively short timelines, AI just blows our socks off. 25% that it does that, but at a longer timeline — a decade, two decades, three decades out. Then 50% that AI is a very important technology — more important than the Internet or the automobile — and reshapes things, but does not fundamentally reshape human dynamics across a broad range of fields.

Things like international relations or politics are among the more resistant domains toward AI solutions. At the same time, another risk is that you’ll have people who view the AIs as oracular. We’ve seen cases of people who are encouraged by ChatGPT to think they’ve developed some new scientific theorem or discovered a new law of physics. They’re very smart at flattering you.

One thing I do is build models. Sometimes a bad model is worse than no model or your implicit mental model. Trusting an all-knowing and all-powerful algorithm, especially in cases where the situation is dynamic — the laws of mathematics don’t change, but international relations and politics are always dynamic and maybe changing faster — and whether the AIs can adapt to new situations quickly is also an open question.

Jordan Schneider: If we’re trying to bucket the types of things a CEO or a president or a senator does, we have personnel management — who am I going to hire, who am I going to fire? We have the outward-facing stuff — what do I say to this interviewer, how do I talk in the debate? Then we have these decision points where you have a memo, you could pick A, B, or C, and there are different sets of trade-offs where you could optimize for this thing or that thing.

I don’t think it’s crazy to think that parts of those different buckets could be radically improved, even just to play out the different second and third-order effects of whatever you’re negotiating for in the next budget bill or something.

Nate Silver: For discrete tasks, AI can already be wonderful. I’m doing a little coding now on a National Football League model, and it’s late at night. I’ve been up a long time, had some wine at dinner, and I’m thinking, “Okay, Claude, how do you do this thing in the language I’m programming in?” The thing is a discrete task where I have enough experience with these models to expect it to give a good answer, and I have enough domain knowledge that when I plug in this code, I can tell if it works or not. I’m not going to have some bad procedure that chains into other bad procedures in a complicated model.

There’s been mixed evidence on how much more productive AI makes people. My stylized impression is that it makes the best people even more productive and makes people who are not that smart maybe worse. I worry that it’ll be a substitute for domain knowledge and human experience, but for certain things it’s already superhuman, and for other things it’s dumb as rocks.

Knowing what is what — there’s a learning curve for that.

Jordan Schneider: There’s also this aspect where the human floor can be pretty low, especially if you’re tired or stressed or you’re the president and you’ve got a hundred thousand things being thrown in your face. It’s literally an impossible job. Maybe there’s also this weird electoral feedback thing where, presuming that AI is really helpful for winning and governance, the folks who trust it more and faster are the ones who perform better in their jobs and get elected to higher and higher office.

Nate Silver: Government is backward in a lot of ways. This varies country by country too. In some ways, America has maintained a relatively high degree of international hegemony despite having this constitutional system that’s now hundreds of years old. It has flaws that people — not to sound a little personal here — Trump has found a way to exploit.

It’s amazing that we still entrust all of this power in one president. New York City, with 8 million people, is probably about as large an entity as you should have maybe one person in charge of. But we haven’t really developed other systems.

If anything, the more dynamic places in the world right now (you’d still say the U.S., you’d say China, and then probably the Middle East) have kind of cheated. The U.S. is increasingly less democratic, and the other two were not really democratic to begin with. Maybe my assumptions have been wrong.

Poker and Prediction Markets

Jordan Schneider: Let’s talk about prediction markets. You spent a lot of time thinking about poker and had this whole experiment in your book where you just spent a year betting on basketball. The thing about basketball and poker is there’s a lot of data and track record you can base your estimation on. You can find your edge in very weird corners. But a lot of these markets on Polymarket and Kalshi are very one-off. Right now there’s “Is Trump going to put more sanctions on Putin in the next six weeks?” Is there a regression you can run on that? Not really. It’s fascinating because these are so much more one-off and open-ended than what you would see in the stock market or sports betting.

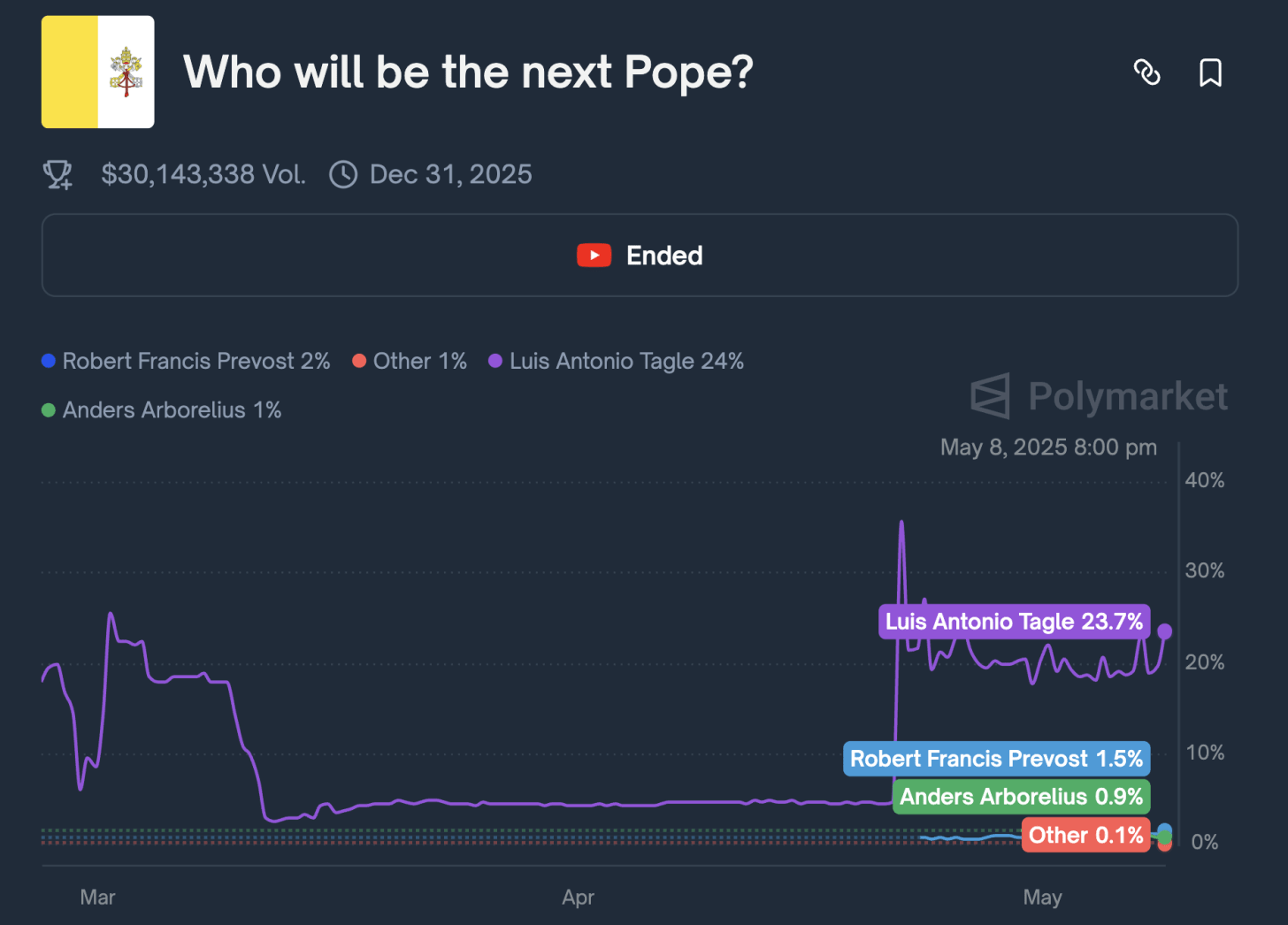

Nate Silver: I’m a consultant for Polymarket, so I do have a conflict of interest to disclose. Sometimes the one-off events are not as good. One market where Polymarket, Kalshi, and others did not do as well was the election of the new Pope. Cardinal Robert Prevost, who was ultimately elected, had been given a very low chance of being selected.

What happens there? On one hand, you have a papal election every 10 or 20 years, so you don’t have a lot of data. Everybody leaks, but the papal conclave does not leak, apparently. There’s not really any inside information. Then people apply the heuristics they might apply to other things. When they have the smoke signals come out quickly, they think, “Oh, it must be the most obvious name.” The favorites went up, and that wasn’t true. People just had no idea what was going on. There are some limitations there versus things like elections, which are more regularizable.

At the same time, there is a skill in estimation that poker players and sports bettors have. Maybe I’m making a real-time bet on an NFL game and Patrick Mahomes gets injured — the quarterback of the Kansas City Chiefs. I have to estimate what effect this has on the probability of the Chiefs winning. If you just do that a whole bunch, then you get better at it. You have to have domain knowledge and be smart in different ways.

When I’ve consulted in the business world — not capital-C consulting, but for people who are actually making bets — you realize that a good answer quickly is often what makes you money, whereas a perfect answer slowly doesn’t. This is one reason we’re seeing a shift of power away from academia toward for-profit corporations. You can say that’s bad or good — I think we need both, frankly. But you have a profit motive and an incentive to answer a question quickly.

In poker, the same thing. Say I play a hand and I’m getting two-to-one from the pot, so I need to have the best hand or make my draw one-third of the time. Then you go back and run the numbers through a computer solver, and you’re like, “Well, actually here I only had 31% equity when I needed 33%.” That was a big blunder. For most people, 31 versus 33 is the same, but with training you can estimate these things with uncanny precision. There’s a lot of implicit learning that goes on, and it becomes second nature.
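The arithmetic behind that example can be sketched in a few lines. This is a simplified illustration that assumes a single call with no further betting, and the function names are hypothetical rather than anything from the conversation or from a real solver.

```python
def break_even_equity(pot_odds_for: float, pot_odds_against: float = 1.0) -> float:
    """Equity needed to break even when the pot lays pot_odds_for-to-pot_odds_against."""
    return pot_odds_against / (pot_odds_for + pot_odds_against)


def call_ev(equity: float, pot: float, call_cost: float) -> float:
    """Expected value of calling: win the pot with probability `equity`,
    lose the call amount otherwise (assumes no further betting)."""
    return equity * pot - (1.0 - equity) * call_cost


# Getting two-to-one: risk 1 unit to win 2.
needed = break_even_equity(2.0)      # one-third of the time
ev_at_31 = call_ev(0.31, 2.0, 1.0)   # slightly negative: a losing call

print(round(needed, 3), round(ev_at_31, 2))
```

Under these assumptions, calling with 31% equity loses about 0.07 of the call amount each time on average. The shortfall is invisible in any single hand but adds up over many repetitions.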

Jordan Schneider: Are you worried about insider trading with all this political betting? There’s an aspect where these are all crypto — you get on these markets with crypto. There were markets asking which way Susan Collins is going to vote. The tail outcomes for a legislative assistant in her office are that you can make 10 times your salary in a minute. What’s your thinking on this?

Nate Silver: There are a couple of qualifications. First of all, people on the inside often aren’t as well-informed as they think, or there are downsides to having an inside view versus an outside view. You might drink the Kool-Aid, so to speak. You might be in a bubble.

Jordan Schneider: There are ones where you can literally — I mean, there have been lots of group chats of people talking about very sketchy trades and one-way bets being made in the stock market about what’s going to happen with a trade deal. You can literally be the person who decides and be betting on the side.

Nate Silver: If there are incentives to make money in a world of 8 billion people, many of whom are very competitive and most of whom have access to the Internet, people are going to find a way to do it. It’s not just that game theory equilibrium is a prediction of what occurs in the ideal world — it’s what very much does happen.

We’ve seen things in the crypto space like an increasing number of crypto kidnappings. That’s one of the consequences of people being worth vast amounts of wealth that isn’t very secure. It’s just going to happen until you up security or have better solutions.

I don’t think there’s necessarily any more or less insider trading on Polymarket than there might be for sports betting sites — we’ve seen a lot of sports betting scandals — or for regular equities. The literature says that members of Congress achieve abnormal returns from their stock portfolios. I’d have to double-check that — I’m sure there’s some debate about it.

People can also sometimes misread insider information, or read a false signal as if it were insider information. If they see an unusual betting pattern, they’ll think, “Oh, okay.” There were some tennis betting scandals, since tennis is an easy sport to throw because it’s individual: two people. You don’t need multiple conspirators. Something unusual happens and people think, “Therefore, it must be an insider trading move” or “Therefore, it must be someone throwing the match.” Maybe sometimes it is, other times it isn’t. It’s very hard a priori to know which is which.

Jordan Schneider: It’s a new variable in politics. The way you could previously cash out was becoming a spy for another country — very high risk with lots of downside. Or you’d have your career and then become a lobbyist, but that pays out over years.

This is something new, and we’re going to have to watch it because I find I get a lot of value from seeing these numbers every day and watching how they change. Polymarket has become something I check before looking at the homepages of major news outlets. But there’s something that makes me a little queasy about opening up this new realm of betting where maybe we, as citizens, don’t want the people we’re paying to do these jobs to have this alternate way to cash in.

Nate Silver: Or journalists too. I know a couple of projects where people are basically trying to apply journalistic skills to make trades — not necessarily in prediction markets, maybe a little bit of that, but more in equities.

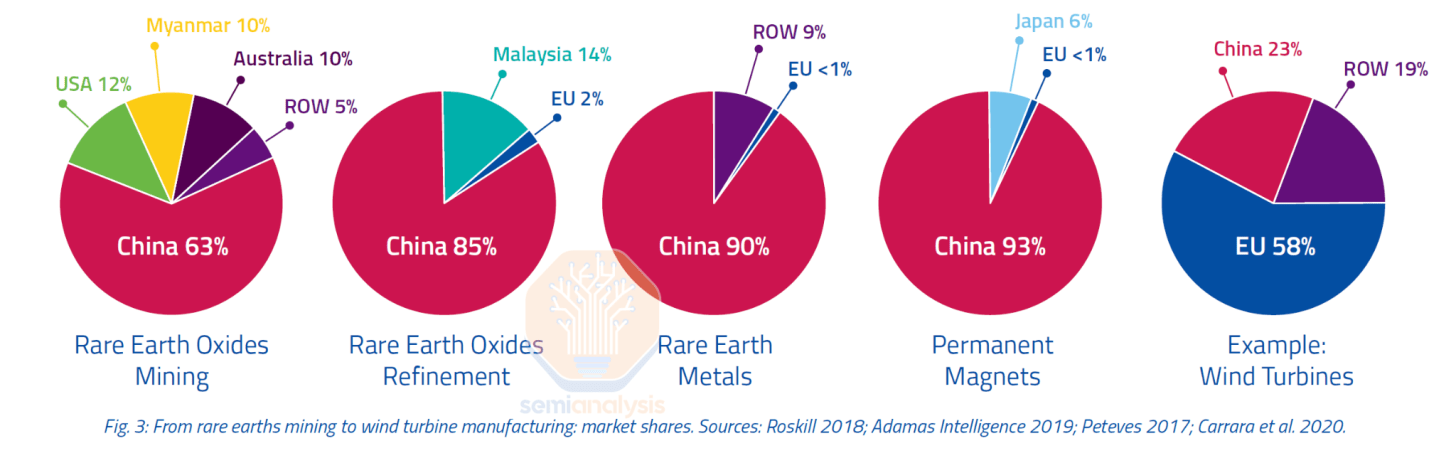

If you have a well-informed view of China, especially particular industries, that has big implications for how you might trade a variety of stocks, including American stocks.

My impression is that people at Wall Street firms trading equities don’t like all this macro risk. They prefer to predict what the Fed is going to do, analyze earnings reports, and work with long-term trends they can run regressions on.

They don’t like profound political uncertainty where the macro bets are very long-running. It may be hard to come up with the right proxy for the trade you want to make.

You’ll probably see more fusion between trading and research and journalism at every step along that path.

Jordan Schneider: I’ve started interviewing a lot of these Polymarket investors and traders. It’s remarkable to me that there are no funds or teams. I assume that’s just because these markets aren’t liquid enough. But what would your dream team of skill sets look like if you were going to start the Polymarket hedge fund?

Nate Silver: You probably want some AI experts. You want macro people, you want a mix of people who are smart micro traders — poker player estimator types. More barbell theory. You want the macro people — a China expert, an AI expert, an expert on American politics. On average, the takes that Wall Street has about American politics are pretty primitive. You want someone who understands macroeconomics and inflation and the debt. You want a mix of skill sets.

In terms of what the banks and hedge funds are doing, different firms probably differ in what they think. There is reputational or enterprise risk to trading. Crypto can be a gray area. Prediction markets can be a gray area. I suspect there’s probably more of it than you might assume.

Until the last couple of years, there definitely wasn’t enough money in prediction markets overall to be worth it for institutional traders. Now there might be, especially for smaller firms. If you were a firm that wanted to say, “We are primarily trading non-traditional assets — prediction markets, cryptocurrencies, maybe low market cap stuff” — there are crypto hedge funds. If they’re getting more into prediction market stuff too, it wouldn’t surprise me in the least.

As prediction markets get bigger, then the bigger quant hedge funds and eventually Morgan Stanley and Goldman Sachs will want to trade them too.

The Barbell Strategy

Jordan Schneider: What do you think your legacy is now, and how would you want to change that in the next 10 to 20 years?

Nate Silver: The best work I’ve done is the book I wrote a year ago, On the Edge, now in paperback. The election models are valuable and might be what I’m best known for, even though it feels like they’ve been 5 or 10% of my lifetime productive work. That’s a really hard question to answer — I don’t think I’m quite old enough to answer it yet.

Jordan Schneider: I can answer it maybe. There weren’t a lot of numbers in many discussions before you. With elections, people have to respond to facts. The facts of you boiling all the polls and demographic data and voter registration data into one thing is a much more tangible, grounded set of facts than “you say Marist, I say Quinnipiac” — what does that even mean?

This approach is spreading now into wider areas on Polymarket and Kalshi, where different modes of politics now have numbers attached to them in ways they didn’t before. There’s literally a “Will China invade Taiwan?” market. However much we want to believe anyone has insight into that, there was no number you could point to before to ground you in that reality.

Great man versus trends — there’s been a lot more data and computing power over the past 25 years to enable what you do. But it was both the modeling and presenting it in a compelling, engaging voice that really helped reshape the way people think about these issues.

Nate Silver: I appreciate that I have certain talents. If you’re a 7 out of 10 on the modeling and a 7 out of 10 or maybe 8 out of 10 on the presentation, that overlap is somewhat rare. The overlap is maybe more than the sum of the parts — more valuable than being merely pretty good at each individually. I’m a good modeler, but the combination of those skills is valuable.

The world is moving directionally more toward this. Prediction markets in particular — if you look at sports betting, it’s not really growing as much as the big industry players had hoped, but prediction markets have had some false starts before. Now with Polymarket, Kalshi, Manifold, and others, you have a robust and well-constructed enough trading ecosystem where they are here to stay for many things.

If I’m about to publish a story — let’s say on Trump’s latest round of tariffs — and I need to check if Trump has done anything crazy in the past five minutes since I last read the internet, I’ll just go to Polymarket. Did any markets radically change? Is there some massive event that would make it insensitive to publish a story? Has there been an earthquake somewhere? You can instantly see that news. Twitter used to be somewhat similar for instant feedback.

But this leads to a gamified ecosystem where, as a poker player, sports bettor, or rapid news consumer, you’re always checking your email, phone, Twitter, and the internet. You’re always aware of 15 things at once. It makes it harder to unplug and creates fuzzy boundaries between work life and real life. If I’m running on the east side listening to a podcast and thinking about my next article, that’s work. If I’m checking my phone late at night when I’m out at dinner — checking work stuff, not gossip — that’s work too.

The world’s moving more that way, for better or for worse, and it prioritizes quick computation and estimation.

Jordan Schneider: Does this make it harder to do big things anymore?

Nate Silver: No, it creates more of a barbell-shaped distribution. Working on the book took me three years — that was really important fundamental work. Right now I’m working on this National Football League model. That’s a six-week project, not a three-year project, but it’s foundational work that will produce dozens of blog posts and hopefully hundreds or thousands of subscribers for Silver Bulletin for many years.

Having three or four things that you’re really interested in and fully invested in (obsessions, you might call them) is very valuable. But the skill of quick reaction is also important: your best flash five-second estimate, having the first authoritative take on Substack when Zohran Mamdani won the primary by a larger margin than predicted.

I was in Las Vegas at the World Series of Poker. I had just busted out of a tournament and hadn’t seen any great takes on this yet — it was a very fruitful subject. It was midnight New York time, 9 PM Vegas time. I cranked through until 3 AM Vegas time, 6 AM New York time, and published what I thought was a pretty smart story on it. That kind of thing is important.

The middle ground — the ground occupied by magazines, for example (not that places like The Atlantic, which have become digital brands, don’t do great work) — is maybe the in-between where the turnaround is too slow to be the lead story in a rapidly moving news cycle, but it’s not quite foundational work either.

Academia suffers from even more of this problem. The turnaround time to publish a paper is just too slow.

I’ll run a couple of regressions, give it a good headline, make some pretty charts, and it’ll be 90% as good as the academic paper in terms of substantive work and 150% better written because I’m writing for a popular audience, not journal editors. That exchange of ideas is what moves the world.

It’s fascinating to see these dynamics. I don’t know a lot about DeepSeek, for example, but it was interesting to see the narrative shape in real time about how competitive China is in the short to medium term on large language models. Or to see with Zohran Mamdani winning the primary, and probably the general election too, how much that anchors the conversation in different ways.

Stylized, abstracted, modelized versions of the real world become more dominant. We’re all model-building. I have a friend who’s a computational neuroscientist at the University of Chicago. He says ultimately the brain is a predictive mechanism. When you’re driving and looking at the road ahead, you’re not seeing a literal real-time version of the landscape in front of you. It’s a stylized version where your brain makes assumptions and fills in blanks because it’s more efficient processing. The message length can be shorter if you focus on the most important things.

You can notice this if you’re in any type of altered state — whether drug-induced, under anesthesia, tired, or experiencing extreme stress or stage fright. You process things differently at a broader level.

My first book, The Signal and the Noise, is about why the world is so bad at making predictions. Part of it is that you have to take shortcuts or else you’ll never get anything done. But when you take shortcuts, that leads to blind spots, and that’s not really solvable. AI might scrape off some of the rough edges, but sometimes the rough edges are created by the market being efficient and dynamic and by people keying off other people’s predictions and forming a rapidly shifting consensus. Those dynamics are going to remain very interesting.

Jordan Schneider: The DeepSeek experience was surreal for me because this is a story — China and AI — that I’ve been following for five to seven years. All of a sudden, it became the story. Our team hit it pretty well; we doubled our subscribers. But watching our little thing try to shape the broader narrative, and suddenly all these journalists are asking, “What’s this company?” I’m thinking, “I’ve been writing about it for two years. Where have you guys been?” I’ve never had one of my stories really become the main story before.

Nate Silver: It can be an amazing feeling. This relates to the Great Man theory in some way. Early in a news cycle, the way a story is covered is very important. One news outlet or journalist covering a story differently can shape attitudes about it for weeks to come. This is partly why PR people always say, “Don’t say so much, but be fast.” You want to preempt things because that founder effect can matter.

Tell me, Jordan, what did the mainstream media (The New York Times, Wall Street Journal, and others) get wrong about the DeepSeek story?

Jordan Schneider: There was this first narrative that it cost them $6 million to train their model. That was illustrative of the problem. When I went on Hard Fork with Casey Newton, that’s the first question they asked me. I’d already written a few things, but no — it did not cost $6 million to make this model. You need to hire the people, run the compute, have the infrastructure, run lots of experiments. All in, it’s probably more like half a billion dollars.

But there were enough people for whom that narrative was interesting or financially useful that it spun faster than my loyal crowd of ChinaTalk listeners who were hearing us beat the other side of the drum.

Another thing that’s been shaped by this is the export control debate. There was this expectation that because DeepSeek exists, export controls are worthless. There’s lots more nuance to that, which we’ve covered in other podcasts. But the simplified version of drawing that line from A to B has really shaped the trajectory of American policy toward AI export controls and AI diffusion more broadly.

The cohort of folks who understood this nuance wasn’t able to seep into the halls of decision-making. We have now, quote-unquote, “lost” when it comes to semiconductor export controls, partially because there was this moment that ended up reshaping narratives, and the people who agreed with my version of the facts weren’t able to influence policy.

Nate Silver: These narratives that prevail are often in someone’s economic or political interest. There was a narrative after the 2024 election that Democrats lost because of low turnout, especially among younger voters. There’s a slight grain of truth in that.

However, this was exacerbated because people don’t realize it takes a month to count votes fully in the United States. The counts you see on election night and the next day shortchange turnout by tens of millions of votes. But that was a convenient narrative because people wanted to move to the left. It’s more that younger voters — particularly young men — shifted against Democrats. There’s somewhat lower turnout, but it’s still historically high.

It’s tricky when you have the more nuanced take versus the easily memeable take. If you’re making sports bets, a lot of it’s in the nuance. We all know this quarterback is good, but maybe he can be both very good and a little overrated by conventional wisdom for various reasons. There’s enough of a profit margin where you have positive expected value on your bet. In the news cycle, that’s less forgiving. But Substack and podcasts give a little more room for subtlety and exploring things.

Jordan Schneider: I’ve shied away from writing a book all these years. You’re very quick-twitch but have also done it and just made the case that it’s really valuable. Why don’t you expand on that for me? What has having these two book projects under your belt given you?

Nate Silver: For one thing, I like the process of writing a book. Ordinarily, day to day, I consider myself a journalist, but for the most part, the process involves me and a computer. Particularly when it comes to politics, I don’t really want to call Democratic or Republican sources and get their take. People are paying me for my take, and I don’t care to be spun. That speeds up the turnaround time.

For the book, it’s the opposite. On the Edge involved interviews with roughly 200 people — a lot of experts and practitioners in fields I find fascinating. Even if you weren’t working on a book, if you took two years to interview 200 really smart people about things they’re knowledgeable about just for your own edification, that would make you a lot smarter.

Jordan Schneider: When I was reading the book, I was annoyed. I wanted the Nate Silver experience — I want to listen to the hour-long conversation you had with Peter Thiel. That’d be fascinating if it’s on the record. Why not sequence it that way?

Nate Silver: The people I spoke with were often very candid, maybe against their narrow self-interest in some cases. But I would never approach somebody and say, “I want to talk to you about X.” I would approach them and say, “I’m writing a book about X that’ll be published in a year or two.” I would always be very honest about the rationale for the conversation.

If something Peter Thiel said was super newsworthy — and I’m trying to remember if that was a conversation with explicit ground rules — but if it’s a conversation where someone says something newsworthy in a non-book context, it’s a little ethically fraught. I don’t think it crosses some bright line journalistically, but it’s complicated.

With Sam Bankman-Fried, that was an explicit understanding. He definitely told me things because he thought the timeline would insulate him from some risk or he could shape the narrative somehow. It wound up coming out after he was already sentenced and in prison.

You’ll have reporters who embargo reporting on political projects — there should be lots of books about Biden’s decline. The fact is that people will tell you more when they have more protection. Probably 80% of my interviews were more or less fully on the record. Some were on background, to use journalism’s distinctions for these terms, and there are in-between categories where you can publish with approval. I don’t love that, but I did it for one or two important sources where it was the least bad option.

People are more candid if they understand the context of your project and believe it’s coming out in the context of a book that puts everything into a broader universe.

I used to work at The New York Times — that ended in 2013, but now I write for them a few times a year. It’s friendly. If The Times calls me for a story, I’m still sometimes reluctant to say anything because you’re going to have one or two quotes put into their narrative that may or may not suit your purposes and may or may not be accurate.

The Times is popular in part because they write in narratives. Even a boring economic data story has a little spin on the ball — good writing, good headlines. Nothing wrong with those things, but sometimes there’s a narrative that’s a little reductionist. They’re the best in the business, or among the best. When you deal with people who don’t have those journalistic standards, you’ll encounter more problems potentially.

Jordan Schneider: We have these nice historical interludes in the book. Is that a type of non-journalism-driven writing? Is that something potentially on your horizon as well? How do you think about hanging out in the archives for a year or two?

Nate Silver: History and statistics are closely tied together. For this NFL project, I’m researching every NFL game played back to the 1920s. It’s remarkable to see how this one sport has survived with significant changes. But you come across something and wonder, “Why were there no games played that day?” Oh, the September 11th attacks. You encounter changes in real-world behavior and technological changes.

Any system model sometimes involves extrapolation from first principles, but the most empirical ones just say, “Okay, we are extrapolating from history and making the assumption” — and it is a big assumption — “that trends that existed in the past will correctly extrapolate to the future.” Often they don’t. Economic forecasting is notoriously difficult because there are regime changes. The economics of the internet era versus the pre-internet era versus the pre-automation era versus the pre-agricultural era — there’s great research showing these are all very different.

The field of economic history, sometimes called progress studies, is quite underrated. The notion of why different societies, great powers or not-so-great powers, rise and fall — why is South Korea as prosperous or more prosperous than Japan today per capita when there was a 10x or 20x gap 40 years ago? These seem like really high-stakes, important questions. Because they play out at longer time scales, they often don’t motivate people as much. But they seem vital.

Even within AI, from what I understand, the AI companies are not really putting a lot of effort into thinking about what this looks like in five or 10 years. They have longer time horizons than most, but they’re not really forecasting how the entire world changes if we do achieve superintelligence. They talk about it a lot — listen to the Dwarkesh podcast or whatever — it’s a popular subject, but that’s probably substantively more important than what’s in the daily news cycle.

Theories of Political Change (Nate Silver Thought)

Jordan Schneider: There’s a myth of a software engineer who’s annoyed by something in the Spotify app, joins Spotify for two weeks, fixes it, and quits. I’m curious — what would you do during such a stint if you could plop yourself down anywhere in government?

Nate Silver: Let me redesign the Constitution, I guess. Maybe we need a ban on gerrymandering and we need to change the Senate. To some extent I’ve done that. I was dissatisfied with the way elections were covered, so what I thought was a little two-week project became a life-altering career.

I do feel like there’s maybe a little more openness to improvement. New York City has a new subway map now, which is much more legible. I saw that the other day — that was a nice little improvement. I could be a good restaurant consultant: “This restaurant’s not going to work. I live in this neighborhood. Nobody ever walks on this block. They walk on 8th Avenue and not 7th Avenue — I can’t explain why.”

Being a copy editor, I guess. I notice copy editing problems in advertisements and things like that a lot.

Jordan Schneider: Think bigger. Come on. What Cabinet secretary? What bureau? Jamaal’s saying, “I’ll give you any job, Nate.” Which is it?

Nate Silver: How to have a good poker scene in New York — we’ve got to have poker rooms. We don’t need the rest of the gaming.

I guess I agree with the abundance critique that New York just takes an awful lot of time to build things. At the local government level, there often are incremental improvements made in different ways. In New York, our new infrastructure projects — LaGuardia Airport, the other airports, the West Side development — they’re all nice. They just took too long.

But Jamaal is to my left, I think. I do think that he’s enough of an outsider and bright enough that he might do those smart, experimental things that don’t just fall under bureaucracy and inertia. I don’t know — was it Japan or Korea where they have little lights embedded in the sidewalk? That’s pretty cool. Just little things. If you’re looking down, you know when to cross or when not to.

Jordan Schneider: You’re a “department of special projects” guy — “let’s just make life better on the margin a little bit.” Or we’re going to let Nate Silver rewrite the Constitution. More barbell theory.

Nate Silver: Think big, everything’s small. Absolutely.

Jordan Schneider: Okay, let’s do barbell theory again with money. Say SBF hits and you’re just his advisor, and you got billions of dollars to spend on stuff — maybe not dumb stuff. Where are you putting your marginal $10 billion of philanthropy around politics?

Nate Silver: I don’t think politics is a very effective use of money. If it is, it’s at the local level. If you look at projects that were really successful — one of the most successful projects in American history is the conservative movement’s multi-decade effort to win control of the American court system. Supreme Court justices serve five times longer than presidents on average. Doing ground-level, long-term work is quite valuable. The notion of how to make government more efficient matters.

Jordan Schneider: Let’s lean on that for a second because one of the things that story did was invest in ideas and people and the intellectual superstructure for this movement. There don’t seem to be — maybe we’re starting a little bit now with the abundance stuff and Patrick Collison funding progress things — but there seem to be a lot fewer center or left billionaires who are investing in the interesting intellectual ecosystem to grow the movement.

Nate Silver: You probably see more of it on the right. The Peter Thiel Fellowship program pays kids to drop out of college — that’s an interesting ideological project that has produced some degree of success. The effective altruists would say that you want to purchase anti-malaria mosquito nets in the third world, and probably that’s very effective.

As much as there are lots of inefficiencies in politics and government, it also reflects the revealed equilibrium from complicated systems and complicated incentives. Maybe change is harder to achieve. It’s part of the reason why I’m reluctant to give off-the-cuff answers.

There have to be improvements you can make in government efficiency. Why does it cost 10x more to build a mile of subway track in New York than in France or Spain or Japan? I also think there are probably a lot of really sticky factors explaining why that’s the case.

In principle, I’d be on board with a project like DOGE. But DOGE should have spent the first three months studying this — not a fake commission, but actually studying where’s the overlap between problems where there really is inefficiency and where it’s tractable and solvable. That’s not something you could answer off the cuff or just by looking at a spreadsheet.

Jordan Schneider: Would you ever join a campaign?

Nate Silver: No, I don’t think I’ve ever really been offered that, believe it or not. It goes against my ethos — I want to study and have the outside view on politics. Campaigns are pretty rough. It’s very tough because you basically have one outcome: do you win or lose? In the presidential primaries, you have 50 states and they go in sequence, so it’s a little bit better. But it’s very hard to know what worked or what didn’t.

Kamala Harris and Donald Trump had lots of different, subtle, nuanced strategies. The fact is that if inflation had peaked at 4% instead of 9% in 2022, then she might have won, having nothing to do with strategy per se. It’s very hard to get feedback on campaigns.

I’m skeptical that you can gain as much through better messaging as you might think. It becomes saturated. The first time the next candidate uses Jamaal-style messaging, they’ll probably get something out of it. The fifth one who does it might backfire because it seems like a bad facsimile of what came before. Just like there are various Obama imitators or mini-Trumps, people like novelty — and they like some sense of authenticity.

What seems authentic is very tricky. Trump, in many ways, is a very fake, plasticky person, and yet he has this ironic, almost camp level of authenticity that would have been hard to predict in advance. I was not alone in 2015, when he came down the escalator at Trump Tower, in thinking this was a joke.

This sketches the limits of prediction in general. The world is complicated and dynamic and contingent and circumstantial. Social behavior is contagious, and the focal points created by the media and the internet do more of this, where things can change unpredictably and rapidly. There’s less political science in running campaigns than in a lot of other fields that might possibly be in my interest set.

Jordan Schneider: It’s interesting because the big tactical decision that people are still talking about is, why didn’t she go on Rogan? Well, even if she did, it wouldn’t have gone well because she doesn’t vibe with that. It’s a bit of a revealed preference — she didn’t do this open-ended media appearance because she doesn’t fit it.

There’s an aspect of — you can only “Manchurian Candidate” your candidate to go so far from their essence as a human being. Until we’re electing AI models, people can still just get a sense for whether they like or dislike people. That’s probably 2 or 3% marginal.

Nate Silver: When President Trump got shot, it was a very sympathetic moment. I never voted for Trump and wouldn’t, but even then I felt some sympathy. That moved the polls by one or two points. One of the most momentous events of the past 50 years of American campaigns.

When Biden had the worst debate in presidential history, that moved the numbers by maybe 2%. It mattered because he was already behind and now he fell further behind.

There are times when preferences are extremely plastic and times when they’re extremely sticky. Knowing which is which, which interventions work at which intervals, is probably important.

Jordan Schneider: Has covering politics shaped your view on the great man versus structuralist view of how things unfold?

Nate Silver: The empirical default is to be more structuralist — that’s the trendy thing. I don’t know. I think Donald Trump is a very important figure. If Donald Trump had been a stand-up comedian instead — Trump is funny — the world would look a lot different. Elon Musk has been very important to the shape of the world. I’m not saying positively or negatively, but Xi is very important.

Things are trending more that way. I’m not some Elizabeth Warren type, but if the top richest people in the world basically double their wealth every decade — and it’s different people cycling in and out of the list, but if you look at the inflation-adjusted list of the richest 10 or 100 people, and it’s often more about the 10 now than the 100 — they basically double their wealth, inflation-adjusted, every 10 years. You compound that over several cycles, and that concentration of power might shift things more toward a great man theory.

I’m sure there’s a substantial degree of randomness too. I have no idea how China formed, for example, but you get in these feedback loops where you have a virtuous loop or a less virtuous loop. Thirty years ago, Americans were worried about being overtaken by Japan. That happened to Japan a few times, and in some ways it’s still a very amazing, advanced society.

Maybe an example is Rome, Italy, which is one of my favorite places to travel to. I’ve been there at different points in my life. There are parts of Rome where if you go today in 2025, they don’t really look that much different than when I was a college kid in 1999-2000. Except for the cell phones, you could really be placed in 2000 and you wouldn’t miss a beat.

Jordan Schneider: Slight non sequitur. My favorite dad book is called An Italian Education. It’s this memoir of a cranky British person who married an Italian woman and is talking about raising their kid in early 1990s Italy. It’s really fun, kind of New Journalism-style writing. But it’s also this fascinating portrayal of this country at a big transition moment where they’re going from being super Catholic to more modern, and from being very localized to conceiving of themselves as part of this European project.

I don’t have great 2025 Italy takes, but it’s interesting just how much further — or not further — a country could have gone from that moment to today, looking at different parts of the world from 1994 to the present.

Nate Silver: I have a friend who’s Irish — actually Irish, not Irish American — who moved here in early adulthood. He’s gay, and he’s told me, “When I was growing up, Ireland was very Catholic and very anti-gay, and now they almost go out of their way to be pro-LGBTQ rights.” It is interesting how much countries can change.

Conversely, Russia and that sphere are separating a bit more. The United States is diverging more from Europe too. Europe hasn’t grown a lot economically — there’s not a lot of innovation there. At the same time, their lifespans are increasing and they’re taking this slower-growing dividend into quality of life. Whereas in the U.S., male life expectancies, even if you ignore COVID, have basically not increased in a decade.

We are getting wealthier. I worry a little bit that we’re doing things that undermine American leadership and state capacity. We’ve been playing the game on easy mode because the dollar is the world’s reserve currency and the U.S. has the world’s biggest military. We’ve been — this is complicated, and relative to a low bar — a relatively trustworthy player in international relations. If we’re throwing those things away, there might not be an impact next year, but when you visit these different countries and extrapolate out 3% GDP growth versus 1% and compound that over 20 years, it’s extraordinarily powerful. You see it on the ground where some places are stagnant and others are growing.
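To put rough numbers on the compounding point above, here is a minimal sketch. It assumes constant annual growth of 3% and 1% over 20 years, which is a simplification nobody in the conversation claims holds exactly; the function name `compound` is hypothetical and used only for illustration.

```python
def compound(rate: float, years: int) -> float:
    """Return the growth multiple after `years` of constant annual growth at `rate`."""
    return (1 + rate) ** years


# Illustrative inputs taken from the conversation: 3% vs. 1% growth over 20 years.
fast = compound(0.03, 20)
slow = compound(0.01, 20)

print(f"3% for 20 years: {fast:.2f}x")
print(f"1% for 20 years: {slow:.2f}x")
print(f"Relative gap:    {fast / slow:.2f}x")
```

Under these assumptions, the faster-growing economy ends up about 80% larger than where it started, against roughly 22% for the slower one. Starting from the same base, that leaves it nearly one and a half times the size of the slower economy after two decades.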

On Podcasting (Plus: Renaming ChinaTalk?!?)

Jordan Schneider: You interviewed a lot of really rich people for this book. Why do they all want to start podcasts?

Nate Silver: They do love hearing themselves talk. It’s not just that they’re rich. These are people mostly in competitive fields — a lot of them venture capital — where they’ve had success and it goes to their head. You see this in poker — being on a winning streak in poker is helpful. It improves your attitude, makes people fear you. But you can go on “winner’s tilt,” it’s called.

It’s very hard when you’re powerful — people start catering to you. “The Emperor’s New Clothes” is one of the more accurate fables. That and “The Boy Who Cried Wolf” are the two eternal fables that describe human behavior extremely well.

If you’re somebody like Elon Musk, he has made several really good bets, whether they’re skilled bets or luck. If the fourth SpaceX rocket had blown up — even he told Walter Isaacson that he was going to be cooked. But it’s very hard if you’re one of these people who has had that kind of success.

One thing I’ve learned, Jordan, is that there’s always one more tier of wealth and power behind a closed door than you might expect. There’s always one more privilege level. Even at an event that’s already privileged, there’s a VIP room and a VIP room within the VIP room. The biggest VIPs of all are not even at that — they’re already at the after party.

There are smaller worlds too. Keith Rabois told me there are really only six people in all of Silicon Valley that matter. Which is an exaggeration, but maybe not directionally wrong. Maybe a few dozen. They all know one another.

With the tech types and VC types, they feel embattled by their employees who are “too woke” and the media being mean to them. People are mean to me on the internet sometimes too.

Jordan Schneider: Regarding your idea that there’s always one level up — if that’s your theory of the world, then why do you want to have an audience of 10,000 people listening to you talk about the news?

Nate Silver: Part of what I’ve done is go from a giant platform. I used to work for ABC News, which is about as mainstream as it gets. The average watcher is 70 years old at an airport somewhere or maybe a retirement home. Now with Substack, it’s an audience that turned out not to actually be narrower because the notion of building an email list is a good business model and very sticky.

At first, the stuff that goes behind a paywall is definitely reaching fewer people, but they’re willing to pay. You’re self-selecting too. Because the work I do, especially when it comes to election forecasting, is so easily misinterpreted, I don’t mind having a filter for people who come to the problem with more knowledge. It also can make the writing sharper.

If I’m freelancing with The New York Times, I have a good editor over there. They’re often saying, “Slow down, you have to explain this thing better.” It’s nice to have a conversation where you’re starting with certain premises and memories. I’ve stated complicated things before about my political views on issues that might come into play, or I’ve disclosed that I consult for Polymarket. It’s a cumulative project, and inherently nobody has time for everybody’s cumulative project.

Having a smaller audience of even dozens of people, hundreds, or tens of thousands is pretty important. Especially if there is maybe an unfortunate degree of concentration of influence and wealth and power. When I was working on the book, one of the things that surprised me is how many people I had no connection with were willing to have a conversation with me or at least provide a polite response if they had a good reason not to.

It’s a pretty small world and people talk to one another. That’s something that’s shifted a little bit again, maybe toward more of this Great Man theory — although all of them are men — toward this theory where individual agency matters more.

Jordan Schneider: My two cents on this is there’s a big cognitive bias for Keith Rabois to want to think that he is the center of the universe. There are more times than you would think where you have the market providing the discipline, or the people, or bigger sentiment when something blows up, where politicians or the media end up reflecting mass opinion more than they do the opinion of three people who are trying to pull the strings.

Nate Silver: One tip I heard in poker recently is that everybody is the main character of their own poker story. If I got caught making a big bluff against a third party earlier in the hand, and you’re sitting at the table not involved in the hand, Jordan, you might not even notice that — you’re going to be on your phone.

If you got bluffed by another player earlier and I’m not involved, that affects my strategy against you much more than what I did before, because I’m not involved in your narrative except to the extent I affect you. Life is often the same way.

Jordan Schneider: To close, I have an inside baseball question. Silver Bulletin is a great name. ChinaTalk... I don’t know, I think I should get out of it somehow, but I don’t want it to be The Jordan Show.

Nate Silver: People didn’t like the Silver Bulletin name at first. I came up with it in three minutes. I thought Twitter was gonna die, and I needed some placeholder. A lot of names are stupid when you think about them. Sports names are kind of dumb — the Green Bay Packers is kind of a dumb name. But it just sticks, and it seems normal because people repeat it over and over again.

Jordan Schneider: It’s not that I don’t have names I’d be fine with. It’s more that the switching cost isn’t transparent to me — how much it’ll change listenership or open rates or whether it puts me on a larger growth trajectory. I wouldn’t change my coverage to do less China. Right now, it’s still 50% China, but it would just send a signal that it’s more than China here.

Nate Silver: That’s an interesting case. If it’s just a name, it’s a little awkward because it does include an implicit premise for what the subject is. Most of the time I would say the switching costs are actually pretty high. To sacrifice brand recognition is costly. Maybe you need to start a sub-brand or something. That’s a trickier one than for most people.

Jordan Schneider: The other thing is that advertisers are terrified of China. That’s just reality.

Nate Silver: Well, that tips it over to...

Jordan Schneider: Yeah. It’s more once I have a big contract, once I have Google telling me, “Jordan, we’ll buy $500,000 of ads only if it’s called the Jordan Schneider Show” — then you’ll know we’re doing it.

Nate Silver: We’re doing it for 500k. That’d be worth it.

We’ll take new podcast names in the comments below…

Nate said he was into shoegaze so his mood music is: