浦睿文化 review: 王维十五日谈

浦睿文化 review: 王维十五日谈. Rating: highly recommended

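Hello, everyone. In the nine months since this channel launched, listeners have left more than 15,000 comments. Many of them told their own stories; everyone's experience is different, yet each life is just as remarkable. Today I will tell a story of my own. Compared with many listeners, my life has been fairly flat.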

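A few years ago, a group of us rode bicycles across the United States. After dinner each evening, we gathered at camp and took turns telling our stories. When we reached Texas, two teammates, Debrah and Cathy, said they wanted to hear mine. Debrah lived in New York, and Cathy taught at a university in Ohio.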

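Central Texas is a region of rolling hills known as the “Hill Country.” After we passed San Antonio, Debrah asked about my past again. I said my life had been fairly flat and short on exciting stories. Cathy, standing nearby, laughed and said, “Ah, as flat as the Texas Hill Country.” Cathy did not usually say much, but she always got to the point, and her words were full of tension. “As flat as the Texas Hill Country”: flat, with a few rises and dips, I suppose. Where there are rises and dips, there is tension.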

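I graduated from high school in 1984. I could not get into a proper university, so I was assigned to a teacher training school for two years of training before going to teach at a middle school. Back then, China was short of middle school teachers everywhere. Because the pay was low and the social status was not high either, university graduates did not want to teach. The middle school was a lifeless world. Looking at my colleagues and their daily lives, I did not want to spend my whole life the way they did; I wanted to teach for two years and leave. But where could I go? For someone without a university degree, there were not many ways out.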

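At that time, I decided that whatever happened, I would first learn English well. I studied with one of the school's English teachers, an Indonesian Chinese who had returned to China and whose English was better than that of the other English teachers. I also sat the self-study examinations, hoping to earn a bachelor's degree, but I could not finish and gave up. One listener wrote in the comments that she had taken the self-study examinations too, and had earned her degree. Hearing about that kind of experience feels very familiar.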

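Later, I decided to sit the graduate school entrance examination. In those years, quite a few young people changed their fate that way. But that road was not flat. The closer you were to the bottom of society, the more man-made obstacles there were. Those who held out to the end were often not the smartest, nor those with the highest starting point, but those with the most courage and perseverance. Many people who were more gifted than I was, and better placed, gave up when they met resistance. They spent their lives stifled in places they disliked, repeating every day a life they did not want. In the blink of an eye, we have all reached retirement age.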

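In those days, the leaders of grassroots work units held a great deal of power. We wanted to sit the graduate entrance exam, and the leaders would not allow it. There was an “English corner” in our town where people gathered on Sundays to practice English, encourage one another, and compare notes on how to deal with the leaders. The English corner was not far from the provincial library, and we often went there to copy books. Many books could not be bought then, so we borrowed them in the reading room and copied them out; over time, we had hand-copied editions. People had rich imaginations in those days: whatever they had studied or done before, when it came to the exam they all wanted to apply for the field that interested them. One fellow, an agricultural university graduate who researched grapes, was interested in sociology and applied to the sociology program at Nankai University. After graduating, he went to work in Beijing.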

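Another fellow who, like me, had never done an undergraduate degree applied to Shandong Medical College and got in too. Today, all of this would be unimaginable. The library reading room was in poor condition then: there was no air conditioning in summer, only electric fans. Some of the people who came to read talked loudly. The fellow who applied to the medical college had a bit of a fiery temper. Once, when we were reading together, a young couple was talking loudly in the reading room, and he asked them to keep it down. The young man, perhaps feeling he had lost face in front of his girlfriend, came over to pick a fight. My friend pulled a pointed knife about half a foot long out of his book bag, stood up, and went to meet him. The young man who wanted a fight immediately had nothing more to say.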

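One fellow at the English corner is my role model in life. He had only a junior college diploma, and it took him three years of entrance exams to get to Peking University. In the first two years, it was not that he failed; he passed, but his leaders would not release his personnel file, so he could not leave. He studied with the proverbial diligence of tying one's hair to a beam and jabbing one's thigh with an awl, and that powerful drive came from not wanting to repeat the lives of the people around him. That life of meek submission was like a pool of stagnant water; it suffocated youth and numbed the soul.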

Last week, Jensen Huang said that China is “nanoseconds behind” the US in chipmaking. Is he right? Today, Chris McGuire joins ChinaTalk for a US-China AI hardware net assessment. Chris spent a decade as a civil servant in the State Department, serving as Deputy Senior Director for Technology and National Security on the NSC during the Biden administration and back at State for the initial months of Trump 2.0.

Today, our conversation covers:

- Huawei vs Nvidia, and whether China can compete with US AI chip production,
- Signs that chip export controls are working,
- Why Jensen is full of it when he says China is “nanoseconds behind,”
- What sets AI chips apart from other industries China has indigenized,
- How the US has escalation dominance in a trade war with China, and the significance of BIS’s 50% rule,
- Chris’s advice for young professionals, including why they should still consider working in government.


Jordan Schneider: When thinking about AI hardware between China and America — or the global friends manufacturing ecosystem — what are the relevant variables?

Chris McGuire: You’ve got separate production ecosystems. There’s the US production ecosystem that is largely designed in the United States and manufactured largely in Taiwan. Then there’s the Chinese AI ecosystem, especially for AI chips, because we’ve separated them through regulations. Chinese AI chips are made in China. They’re not made at TSMC anymore; they’re designed in China. We’re talking about two separate ecosystems.

Fundamentally, it comes down to the quantity of chips they can make and the quality of those chips. There are a number of variables, but the key factor is the aggregate amount of computing power. You can aggregate large numbers of worse chips, up to a point. Not Pentium II chips, but assuming you’re talking about reasonably sophisticated AI chips, you can aggregate large numbers of them to produce very large amounts of computing power. What matters is the aggregate quantity of computing power, which is a function of quality times quantity of the chips.
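The "quality times quantity" framing can be written down as a one-line model. This is a minimal sketch, not anything from the conversation: the function name and the fleet numbers are hypothetical, and it assumes chips aggregate with no interconnect or scaling loss.

```python
def aggregate_compute(per_chip_performance: float, chip_count: float) -> float:
    """Total compute as per-chip quality times quantity.

    Assumption: chips aggregate perfectly, with no networking or scaling loss.
    """
    return per_chip_performance * chip_count


# Hypothetical fleets, chosen only to illustrate the trade-off.
strong_fleet = aggregate_compute(per_chip_performance=4.0, chip_count=1_000_000)
weak_fleet = aggregate_compute(per_chip_performance=1.0, chip_count=4_000_000)

# Four times as many chips offsets a 4x per-chip quality gap.
print(strong_fleet == weak_fleet)  # True
```

Under this model a producer with weaker chips can only close the gap by making proportionally more of them, which is why the production numbers matter so much.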

Jordan Schneider: Let’s start with quality, because we had some interesting news come out of Huawei over the past week. Alongside Alibaba, they announced their roadmap for their AI accelerators over the next few years. It’s interesting because there are numbers attached to what they’re promising their engineers and customers that you can then compare to what Nvidia has told its customers and investors. What was your read on what Huawei is projecting on the quality side to be able to do over the coming years?

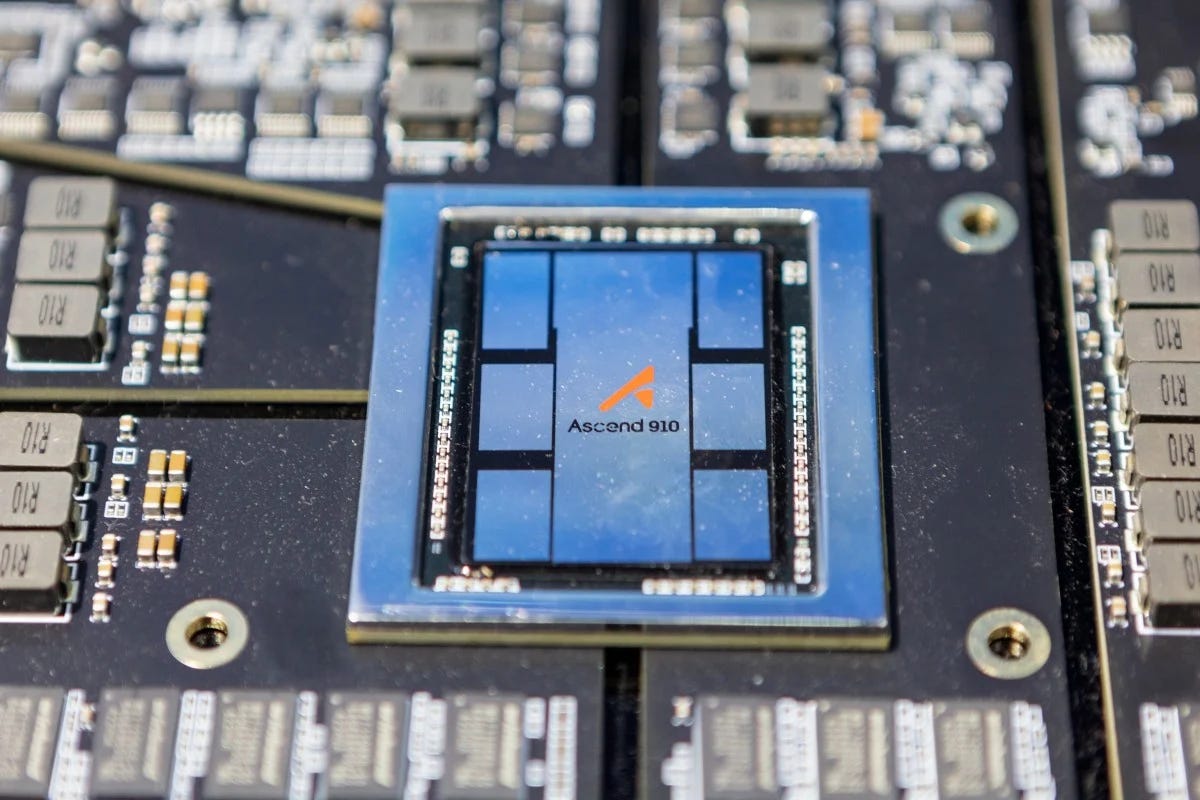

Chris McGuire: There’s a lot of hullabaloo around this announcement. Huawei was projecting out to 2028 and saying they’re going to make all these great AI chips. But actually, a lot of the coverage didn’t dig into the details of the announcement that much. When you do that, especially when you compare it to Nvidia, AMD, or other American companies, you see that they’re stalled. This makes sense because they’re probably stalled at the 7-nanometer node, which means they’re not going to benefit from increasing transistor density in the ways that ours are. They have to find other ways to make their chips better, and that’s very hard. There’s a huge avenue of quality improvement where they’re stalled out.

To give you an idea: their best chip today is the Ascend 910C, which is two 910B processors packaged together into a single chip. On paper, that has around the performance of an H100, though a little worse. There’s a lot of reason to believe that, in terms of actual performance, it performs quite a bit worse. But if we’re looking at the stated teraflops of the chip and also the memory bandwidth, it’s around the same, a little bit worse.

Given that, the question is where they go from here. The interesting thing is their roadmaps for the chips are coming out. Keep in mind, the H100 was a chip that came out three years ago, and the best Nvidia chip now is about four times as powerful as that. If you look at where their roadmap goes, they won’t produce a chip that’s better than their best chip today until the end of 2027. The chips they’re making next year are going to be actually lower in terms of performance and lower in terms of memory bandwidth — at least one of them will be — than the 910C.

There could be some technical reasons for that. It could be that they’re moving to one die rather than two, so maybe they have one die that is slightly better than the 910B die. There could be other reasons for that. We don’t know how many 910Bs were made at SMIC. We know that a lot of them were made at TSMC and illegally smuggled in, which is a longstanding enforcement issue and was a big problem. We know that, but we don’t know how many were made at SMIC. Maybe a lot more of them were made at TSMC than we think, which would be bad from an enforcement perspective and pose a strategic problem. But from a question of what SMIC’s capacity looks like going forward, that would be good news for the United States. It means they’re struggling to make chips. Again, we don’t know that, but it’s a possibility.

Meanwhile, if you look at Nvidia’s roadmap, the chip they’ll make in Q3 2027 is projected to be 26 times the performance of the Huawei chip they’ll release the same year. What we’re seeing is a huge performance gap. There’s a big performance gap right now, probably around 4x between our best chip and their best chip. Based on the stated roadmaps of Nvidia and Huawei, that’s projected to increase by a factor of six or seven over the next two years. That’s significant.
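The two figures quoted here (roughly 4x today, 26x in 2027) can be cross-checked against the "factor of six or seven" claim. A small sketch, with an illustrative function name; the inputs are the numbers as stated in the conversation, not independent data.

```python
def implied_gap_growth(gap_now: float, gap_later: float) -> float:
    """Factor by which the per-chip performance gap widens between two dates."""
    return gap_later / gap_now


# Inputs as quoted: about 4x today, 26x projected for 2027.
growth = implied_gap_growth(gap_now=4.0, gap_later=26.0)
print(growth)  # 6.5, inside the "six or seven" range
```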

Jordan Schneider: The Huawei fanboys would come back at you, Chris, and say, “Chips, who needs them anyway? We’re talking about racks and the Huawei AI CloudMatrix. Huawei’s got some optical magic to take their chips, and even though they’re not as power performant, we’ll dam up some new rivers and figure that out on the backend.”

From a quality perspective, how much can you make up the gap, abstracting up one level from chip to system?

Chris McGuire: There’s a big question of how much you can aggregate chips together. When we were doing this analysis in the government in 2024 at the NSC when I was last there, and also in the analysis I was doing at the State Department earlier this year, the operating assumption was that there’s not a cost to aggregation. There could be some, but it’s difficult to model. Frankly, that seems to be something that the Chinese could overcome. I don’t doubt that they’re making good improvements on the CloudMatrix system.

But the key thing there is, number one, we always assumed that they would be able to aggregate the chips without any loss. The lack of loss is not that surprising. But number two, what matters is how many racks can you make. It doesn’t matter how many chips you can put in a rack if you can’t make that many racks. It comes down to the production quantity question. If they’re putting 15,000 chips in a rack but can only make four racks, then it doesn’t give them that much advantage.

Jordan Schneider: It’s fair to say that there are other design firms in China making AI chips, but we can round them down to zero. No one is going to be doing stuff dramatically better than Huawei anytime soon. With that in mind, let’s turn to the quantity side of the ledger. Where do you want to start us, Chris?

Chris McGuire: This is an area where there is some fierce debate publicly. The US Dept. of Commerce said Huawei can only make 200,000 chips this year. Many others say they could make millions of chips this year. There’s legitimate uncertainty here. Personally, if the government put out a number, there’s probably good reason for that. But let’s entertain the uncertainty.

The number of chips they can make is a function of what their yield is on the fabrication, what the yield is on the packaging, and their allocation of AI chips to other 7-nanometer needs — smartphone chips, et cetera — where there’s huge production. They do have a huge interest in having a domestic smartphone industry. We’ve seen that they make over 50 million smartphone chips a year. When you combine all that, the question is: how many can they actually make?

My takeaway from Huawei’s roadmap is that because they are not getting significant scaling advantages on chip quality — which again makes sense given they’re not advancing in node — and they also face other significant constraints that we haven’t talked about yet on HBM, which they’d have to supply domestically, they’re more or less stuck on chip quality and advancing very slowly. They have to massively ramp production quantity in order to compete with the United States.

It’s unknown where they are right now, but let’s say that they’re 10x behind us. It could be closer to 50 to 100x. If the gap between us is then increasing by a factor of six or seven over the next two years, they’re going to have to scale production by 60 to 70x, assuming they’re at 10x, to reach our level of aggregate compute capability. That’s probably impossible. The quantity that they would need to scale to is so high that it presents significant strategic problems.
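The 60 to 70x figure follows from multiplying the assumed current gap by the projected widening. A rough sketch under the speaker's own stated assumption of a 10x gap today; the function name is hypothetical, and the model assumes the per-chip widening carries straight through to aggregate compute.

```python
def required_production_multiple(current_gap: float, gap_growth: float) -> float:
    """How much production must scale to offset today's gap plus its projected widening.

    Assumption: a wider per-chip gap translates one-for-one into a wider
    aggregate compute gap unless offset by more units.
    """
    return current_gap * gap_growth


for growth in (6, 7):
    print(required_production_multiple(current_gap=10, gap_growth=growth))
# prints 60, then 70
```

If the starting gap is closer to the 50 to 100x also mentioned, the same multiplication gives 300x to 700x.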

Jordan Schneider: This is the key distinction that folks don’t price in when they try to make the EV or solar or even telecom analogy. You have the entire weight of global capital now pouring into Nvidia chips manufactured with TSMC. That isn’t the same as with Nortel and Ericsson, or Ford not caring about EVs, or solar companies barely existing in US manufacturing. We are ramping on both a quality and quantity perspective at a truly world-historic scale.

It’s not that China is competing with a zombie industry in the West or something that China has identified as the future that the West hasn’t. Huawei and SMIC are having to compete with the flagship of global capitalism at the moment. The export control regime has loopholes and challenges, but the fact that you are not allowed to get tailwinds from TSMC anymore, or that there are hiccups in what tools you are and aren’t allowed to buy, makes it a tall order to replicate all this domestically at scale when you are competing with the rest of the world as a collective unit.

Chris McGuire: That’s exactly right. It is not all those industries where they’ve been able to leapfrog in production or where we have ceded our interest to China. This is the linchpin of the global economy right now. Not only do they have to catch up from way behind, but they also have to do it without the equipment that we’re using. The equipment that we’re using, to be clear, that the Chinese don’t have — these are the most sophisticated machines that humans have ever made.

There’s a logical argument here: “Hey, China’s good at indigenizing stuff.” They are. They’re great at it. We’ve seen this in industry after industry.

Those are the most complicated machines on earth. They could do everything — it’s logically possible they could indigenize everything on earth except for an EUV machine, not to mention many of the other tools that are also sophisticated and used in the production process. That’s why this is a unique sector.

We have to be on guard because it is super important. It is the foundation of US technological supremacy. It’s an area where we should take few risks, because if this one goes away, a lot of other things follow from there and it becomes problematic. But we are protecting it decently right now, and we should make sure we have 100% confidence in that. But I don’t see the numbers here — the Huawei chip design numbers or their production numbers — and think there’s about to be an all-out competition where they’re going to equal our companies and we’re going to be on equal footing globally, competing for markets around the world. When you do the math and look at it, that doesn’t become a realistic possibility.

They will be able to produce significant numbers of chips, but not enough to be able to meet domestic demand for AI, given that the compute demands for AI are also increasing so rapidly. To understand this, you have multiple exponentials working at the same time. You have exponentials in terms of chip design getting better. There are exponentials in terms of production capacity, although that one’s more linear. And then there are also exponentials in terms of compute demand. It’s very hard for China to make up all of those simultaneously, which is good news. That’s great for us.

The fact that there are so many headlines celebrating the breakthroughs is all relative to where they are. Look at the Bloomberg headline yesterday that said Huawei is going to make 600,000 Ascend chips next year and that’s going to be double their production. The framing is that they’re doubling production and they’re competing. But that means that they’re making 300,000 chips this year, if that’s true, which is in the same ballpark as Commerce’s number of 200,000. And that is a very low number.

600,000 GPUs is not going to be enough to fill the Colossus 2 data center that Elon Musk is building. Keep in mind, these are also substantially worse chips. Nvidia is making — Jensen said this year — 5 million GPUs total, and then each of those is probably five to six times better right now. But next year might be 10 times better than each Huawei chip. You’re getting to the point where we’re making 50 times more chips than they are.
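The "50 times" figure is easier to follow as a unit ratio multiplied by a per-chip advantage. The sketch below uses only the numbers quoted in the conversation and, like the remark itself, compares Nvidia's output this year with Huawei's reported output for next year. The function name is illustrative, and reading "50 times" as aggregate compute rather than unit count is an interpretation.

```python
def compute_ratio(units_a: float, units_b: float, per_chip_advantage: float) -> float:
    """Aggregate compute of producer A relative to producer B."""
    return (units_a / units_b) * per_chip_advantage


NVIDIA_UNITS = 5_000_000  # GPUs this year, as quoted
HUAWEI_UNITS = 600_000    # Ascend chips next year, per the Bloomberg figure quoted

print(round(NVIDIA_UNITS / HUAWEI_UNITS, 1))                   # 8.3 times the units
print(round(compute_ratio(NVIDIA_UNITS, HUAWEI_UNITS, 5), 1))  # 41.7
print(round(compute_ratio(NVIDIA_UNITS, HUAWEI_UNITS, 6), 1))  # 50.0
```

On these inputs the unit count differs by about 8x, and the roughly 50x figure appears once the five-to-six-times per-chip advantage is applied.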

It’s important for people to keep that in mind when they’re seeing all these headlines that say they’re catching up. The math doesn’t check out when you look at it. It’s possible there are breakthroughs and that number goes down, but we have a huge buffer. If we’re at 50x or 20x China or even 10x China, we’re in good shape relative to them. Again, my risk tolerance is very low and we should push the gap as high as possible. But the headlines aren’t consistent with the math.

Jordan Schneider: Are there more numbers you want to talk about?

Chris McGuire: To give an idea of the quantity: if you assume Nvidia is making 7 to 8 million chips in 2027 based on current roadmaps, which is a 25% increase over 4 to 5 million in each of the next two years, that seems reasonable. We can nitpick with that, but it’s in the ballpark.
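The 2027 estimate is a compound-growth projection. A minimal sketch using the quoted inputs (4 to 5 million units today, 25% growth in each of two years); the function name is hypothetical.

```python
def project_units(units_now: float, annual_growth: float, years: int) -> float:
    """Compound a starting unit count forward at a fixed annual growth rate."""
    return units_now * (1 + annual_growth) ** years


for units_now in (4_000_000, 5_000_000):
    print(project_units(units_now, annual_growth=0.25, years=2))
# prints 6250000.0, then 7812500.0
```

Starting from 5 million, the projection lands just under 8 million; starting from 4 million, it comes in lower at 6.25 million, so the "7 to 8 million" range corresponds to the upper part of the starting estimate.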

Let’s operate under the assumption, for the sake of simplicity — which is probably not accurate — that all the chips Huawei and Nvidia are making are their best chips. What that comes out to is Huawei would need to make about 200 million chips in 2027 to equal Nvidia.

In terms of production quantity, let’s be generous and say they’re at 30% fabrication yield, 75% packaging yield, and 50% allocation. That means they would need 11 million wafers devoted to Ascends, which is most of TSMC’s total production of 17 million wafers a year.

If those numbers go down a little — and they’re probably lower than that — if you say it’s 10% yield, which is low but could be right, 50% packaging yield, and 25% allocation, then China needs to stand up an entire TSMC across all of TSMC’s production devoted to Ascends in order to make enough to equal Nvidia. That is not possible. It’s not possible that they can get the tools and have the capacity to do that quickly.
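The way the three factors compound can be sketched as follows. This is an illustration under stated assumptions, not the speaker's model: the function names are hypothetical, allocation is treated as a simple multiplier on capacity, and the sketch deliberately stops short of wafer counts, because those also depend on dies per wafer, a figure the conversation does not give.

```python
def usable_fraction(fab_yield: float, packaging_yield: float, allocation: float) -> float:
    """Share of gross leading-edge capacity that ends up as finished AI chips."""
    return fab_yield * packaging_yield * allocation


def gross_capacity_needed(target_chips: float, fab_yield: float,
                          packaging_yield: float, allocation: float) -> float:
    """Gross chip-equivalents of capacity needed to ship target_chips good AI chips."""
    return target_chips / usable_fraction(fab_yield, packaging_yield, allocation)


generous = usable_fraction(0.30, 0.75, 0.50)     # the "generous" scenario quoted
pessimistic = usable_fraction(0.10, 0.50, 0.25)  # the lower scenario quoted

print(round(1 / generous, 1))     # 8.9 units of gross capacity per finished chip
print(round(1 / pessimistic, 1))  # 80.0
```

Moving from the generous to the lower scenario multiplies the capacity requirement by about nine, which is why small changes in yield assumptions swing the conclusion so much.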

Jordan Schneider: Is this the right variable to be focusing on — Huawei total production versus Nvidia total production? Nvidia sells to the world — well, maybe not to China, TBD — but from a balance of national power perspective, should we only be counting the GPUs that are in the U.S.? Should we only be counting the GPUs that are in U.S.-owned hyperscalers?

Chris McGuire: That’s a fair question. Maybe we are providing for the world and they’re providing just for themselves. There’s a lot of debate and concern about whether China is going to be able to export AI to compete with us globally, which is absolutely something we should think about and consider. But for them to do that, they still need to fill their domestic market.

Their domestic market is going to be huge. Our domestic market is huge. A huge percentage of Nvidia’s production is going to the U.S. market right now. We’re at well over 50% of global compute.

Even if you slice it up a little bit and say, “Okay, China’s going to put all these efforts into a single firm, they’re not going to do anything internationally,” does that give them the capacity to maybe support one AI firm to be a real competitor in the Chinese market? Potentially. But you’re talking about significant constraints on their ecosystem there. It’s going to be very hard to compete with our robust and dynamic ecosystem at that point, and it’s still going to be difficult. That’s giving them a lot of generous assumptions.

Also, as the compute continues to scale, it’s scaling faster than China can scale production. There’s a fundamental problem they’ll face. In the next generation of models in 2028, 2029 — absent a massive indigenization of tooling — this problem will get worse for them, not better. Unless the United States lets up on its vice grip on tools and compute.

Jordan Schneider: It’s such a wonderful irony that America has been beaten on scale in so many industries over the past few decades. But once people get focused and once there’s enough money in it, you get Rush Doshi’s “allied scale” idea. Maybe America couldn’t do it on its own. Intel isn’t the one pulling their weight here. But when you add up the global ecosystem (what the European toolmakers can provide, the manufacturing out of Taiwan and Korea, the Japanese toolmakers, and the design capabilities coming out of the U.S.), it adds up to something that China cannot be self-sufficient in at a scale which can compete over the long term globally with what America and friends have to offer.

Chris McGuire: This is the most complicated supply chain in human history. They’re very good at indigenizing supply chains — they’ve done that in numerous industries. But if there’s one that’s going to be the hardest for them to fully indigenize, it’s this one. The evidence says that they’re struggling.

They’re struggling partly because it’s hard, and partly because the United States, over multiple administrations of both parties, has taken some good steps to prevent them from moving up the value chain, and Huawei’s roadmap shows that it’s working.

It’s interesting because when you look at where Huawei was, designing chips is not a problem. The Ascend 910A in 2020 had better specs than the V100, which was the leading Nvidia chip at the time before the A100 came out in 2020.

Then the chip that they made was substantially worse. They’ve been forced to rely on their domestic production, which has been very hard for them to scale from a quality or quantity perspective to compete with the West.

Jordan Schneider: Cards on the table — I find this very compelling. A lot of your assumptions you’re taking from Huawei bulls. The 600,000 to 700,000 estimate is something that Dylan Patel wouldn’t disagree with, something he said in his own piece.

But the headlines that Huawei has been able to generate from its reporting — from having 100 chips in Malaysia to White House officials tweeting, “China’s expanding abroad” — what is it about Huawei’s messaging, American views of China, lack of technical sophistication among reporters? How have they been able to build themselves up as such a heavy hitter in this space when they’re really in single-A compared to the TSMC and Nvidia ecosystem?

Chris McGuire: There’s not a good understanding of how good our chips are. You could say maybe there’s not a good understanding of how bad Chinese chips are, but I’d flip that. Nvidia is an amazing company doing amazing things. They’re producing unbelievably powerful chips that keep getting better every year, and they’re also increasing the rate at which they’re coming out. They were previously on a two-year cycle; now they’re on a one-year cycle.

There’s a reason why the demand for these chips is completely through the roof, why they’ve become the most valuable company on Earth, and why all the next five most valuable companies are scrambling over themselves to get their product. They’re good, and no one else is able to do what they do. No discredit to AMD and others that are also making great chips — American companies are doing incredible stuff in this space, and that’s not fully appreciated.

Jordan Schneider: When you have Jensen Huang saying, “They could never build AI chips.” That sounded insane, and when he said, “China can’t manufacture.” China can’t manufacture? If there’s one thing they can do, it’s manufacture. Or when he said, “They’re years behind us.” Is it two years? Three years? Come on. They’re nanoseconds behind us. Nanoseconds.

When you have him saying that China is nanoseconds behind, it’s not true. He knows it, and his engineers know it. I’m sure they’ve done teardowns galore of Huawei architecture, and they know the same thing. What you’ve talked about over the past 30 minutes, Chris, is not secret. The reason that Nvidia is valued at $4 trillion is because of that fact. This is a widely held opinion. But you have their CEO — because he’s trying to shape a narrative that he needs to sell into China — saying something patently false.

Chris McGuire: I agree it’s patently false. You look at the data and it’s patently false. I will also say that everything I was saying was focused on total processing performance. You could make a valid point that memory bandwidth is also important. That’s what everyone’s saying about why the H20 needs to be controlled, which is correct.

How do they stack up in memory bandwidth? There is still a significant gap there as well. The Nvidia chips, even looking at the two roadmaps, are going to be 4x better, potentially 8x better on memory bandwidth too. I want to clarify: Franklin says, “Well, he’s just looking at one part of it.” If you look across the stack, the gap is increasing.

But to your question, we’ve created this perverse incentive structure. When we said, “You can’t export, this is the line, you can’t cross it. End of story.” It was simple. That’s the line. That’s it. That was the kind of “as large of a lead as possible” approach, because you need to hold the line and then the gains will compound over time.

If there’s a legitimate need for it, that’d be one thing. If there was massive quantity of these chips, especially if China was able to fill its domestic demand for AI compute with domestic chips, it would be a different conversation. We should think about what American companies should be able to export there. But that’s not the world we’re in because of the constraints on their production and because of the increases in AI compute needs. We’ve created this incentive structure for companies to overhype China.

There is one other element that’s significant, that we should be real about — there is a significant Chinese propaganda campaign about this. National Security Advisor Jake Sullivan even said that publicly once in 2024 at an event. We know that part of China’s strategy is to convince the West that their measures are futile and that they’re not working. Every single time there’s a breakthrough, there are five South China Morning Post stories talking about how amazing it is and how China’s crushing the West, et cetera. But that doesn’t make it true. That doesn’t change the math. It doesn’t change the dynamics. But we are susceptible to that.

The nature of our system is such that it gets traction here. Math is hard. It’s a convenient narrative that also fits the correct narrative in a lot of other industries. It’s easy to convince us that this is the same when, in fact, it’s quite different.

Jordan Schneider: Let’s talk about policy changes that could dramatically allow Huawei, SMIC, and CXMT — the Chinese memory provider — to inflect in a way that would make the multiples that you’re projecting for Nvidia plus TSMC to be ahead of them different over time. How would you rank the things you would be most worried about if the West started to ease off export controls and what it could do to the curves that Matthew laid out?

Chris McGuire: Number one is something that Dylan Patel highlighted in his piece and something that we haven’t even talked about here but is significant. Everything that I’m talking about is looking at logic die production, and that is a constraint. It probably is a constraint at a higher level because I’m assuming that they can scale that at 10x. It’s still going to be 5-10x below where we are.

But that’s something where, okay, maybe they can use the tools they have and keep acquiring equipment given the regime to build out their fab base significantly. They’re still going to be constrained by HBM because HBM is their number one constraint now. They previously had unrestricted access. They don’t anymore. HBM stacked exports have been cut off, so they’re running through their stockpile now. That will run out. They don’t produce very much HBM domestically. They’re going to be very constrained. CXMT has had some problems being able to produce HBM3 at all.

If CXMT is allowed to produce large amounts of HBM, that’d be a problem. Or if HBM controls are rolled back in the context of a negotiation and we change the policy so we can export HBM to China, that removes the biggest constraint that’s on top of everything I said that could push things down even further. That is probably the number one biggest obstacle they face right now, and maintaining that is very important.

The second thing is there are still a lot of tools that are going through. When I look at this, what I see is the controls have probably been more effective than the media narrative suggests because they seem to be struggling to produce very large numbers. If they’re making 600,000 chips next year, that’s a very low number and it’s not a competitive number for a national AI industry at the quality of chips that they’re making. That means that the controls are working now.

We should not take any risk. There’s some risk that they’re able to figure out much more effective means of producing chips. We could zero out that risk by clamping down on SME exports to China significantly. But that’s where we are now. They are still able to get a lot of tools, especially for non-restricted fabs. There’s a subset of tools that will make it next to impossible for them to advance, especially for them to do 5 nanometers.

But they could order very large numbers of tools and then scale their 7-nanometer production and large amounts of 5-nanometer production. The math means that’s still going to be insufficient. But why take that chance? If the status quo holds and they continue to buy large numbers of tools and divert them, that’s probably the point when they’re going to struggle. But can DeepSeek continue to make good, if not frontier, models while the rest of the ecosystem suffers? Possibly, if they really centralize all efforts into one entity, for the next one to two years, but not after that.

Jordan Schneider: The other way the balance of chips changes is if Nvidia gets to export to China. We had a very interesting arc over the first few months of the Trump administration where it seemed like they were going to ban H20 exports, then they unbanned them, and then China said, “No thank you — we actually don’t want this stuff anyway.” Chris, what is your read on that arc?

Chris McGuire: The most likely explanation is that this is a negotiating ploy. It would be foolish to turn down chips that would help them. There’s so much demand for AI compute that having H20s allows them to have their cake and eat it too. There would still be room for every single domestic chip.

China can protect markets and make clear that they’re going to ensure there’s enough demand. They can protect the market for exactly the number of chips that they’re able to make themselves. Once they guarantee all those are sold, every Nvidia chip goes in on top. That’s well within their power.

Jordan Schneider: That is a game that the Chinese industrial policy ecosystem is well practiced at. We’ve seen domestic suppliers slowly but surely eat market share as their capacity comes online — everywhere from shipbuilding to EVs to handsets. That is a normal trend.

But the retort would be that, after all these CNAS papers about chip backdoors, the Chinese have become paranoid that these are the same thing as Hezbollah beepers or something.

Chris McGuire: It’s possible, but if that were the case, then they wouldn’t want Blackwell chips either, because there’s an equal risk of Blackwell chips having backdoors as Hopper chips. It seems like they do still want Blackwell chips. That says to me that the stance on Hopper chips is more a negotiating ploy — “Hey US, if you’re so desperate to send us AI chips, then only give us the best ones. We’re not going to take the second-best ones because we think this is now a point of leverage that we have over you as opposed to the reverse.” If that’s the case, we should take that, pocket it, and move on.

If you think about the Chinese system, does someone walk through this math with Xi Jinping and show him their numbers versus our numbers? Do they explain that because of the differences in quality, it’s going to be hard for them to ever catch up, and the slopes are working against them on the curves? Probably not.

That’s not going to be a briefing that people in an authoritarian system are incentivized to give their leader. They want to paint a more optimistic picture. If that’s the case, then maybe the leadership does believe that they’re going to catch up soon, in which case, more power to them — let them try. We should let them try without the benefit of massive amounts of US tooling as well. But that perception works in our favor.

Jordan Schneider: It was an interesting arc when the October 2022 export controls hit. I remember writing all these articles about what the Chinese response was going to be — certainly there would be retaliation, right? But there was reporting that they were like, “Eh, we’re fine. We’ll figure this out on our own.”

At that point, there’s a notable disconnect: while these restrictions are critically important for China’s semiconductor ecosystem, senior Chinese leadership and negotiators don’t seem to prioritize unwinding the Biden administration’s policies.

Chris McGuire: The response at the time was “We’re going to indigenize and we’ll see you on the battlefield” — metaphorically — “We’re going to compete.” That’s admirable and is consistent with the history of most other industries. That is the response, and they’ve done very well at that.

The point here is that we think this industry is different. That was the case in 2022, and it’s the case now. The fundamentals of it are different from the industries that the Chinese have been so successful in. That doesn’t mean that they will not be successful here, but it’s going to be harder, and we can’t assume they will succeed just because they were able to do it in the past.

Jordan Schneider: Chris, what’s your read on why it took until Liberation Day for the rare earths card to finally be put on the table?

Chris McGuire: First, Liberation Day was a significant escalation in a much broader element of the trade dynamic than anything before that. We’re talking about hundreds of percentage points of tariffs. That’s a fundamentally different escalation on the US side, so the Chinese were going to escalate to a greater degree as well.

Second, the Chinese now have a better understanding of these restrictions and have better tools to address them. They were taken by surprise in October 2022, and it took some time for them to wrap their heads around what tools they had to respond. That’s why we didn’t see any direct response in October 2022, but we did start to see a Chinese response in 2023 and 2024.

Third, this requires careful management. When the Trump administration went all out on tariffs, it became such a big escalation on both sides. But there are other cards that the United States has to deter China from retaliating against us. The Biden administration did think about that, and there was some careful messaging behind the scenes with the Chinese on this point, making them aware that we have a bunch of cards. The message was clear: we know what we’re doing. We have these actions in this space, and they’re consistent with our original objective. We’re very clear with you that this is the course of action we intend to take, and we’re continuing down that path. That doesn’t preclude various other activities that we’re discussing with you. But if you massively escalate in other areas, we have other areas where we can massively escalate as well.

The US has other ways to impose massive costs on Chinese companies in the short term. Any large Chinese technology company is still reliant on semiconductors from TSMC that are designed with US tools to continue to function and exist, and it’s within our authorities to take those off the game board immediately.

But if we’re not willing to use those tools or even talk about those tools, or the Chinese don’t perceive that we’re willing to, then it becomes a lot easier for them to escalate on rare earths and get escalation dominance over us.

Major export controls against major Chinese companies would be massively painful for them. Cutting any Chinese banks off the US dollar or anything like that would be massively painful for them. The US has escalation dominance. I don’t think we’ve been willing to use it, and that has reversed the dynamics here. But again, the fundamentals massively favor us.

Jordan Schneider: There was a lot of reporting that the Trump administration was surprised that rare earths were thrown on the table — shook, even — all of a sudden the administration thought, “Oh wow, this is bad. We need to figure out what’s going on and find a response.” Then you had stuff like the MP Materials deal. Encouragingly, last week, Bessent started to sound cranky and said, “Look, aircraft engines, chemicals — we can take this in a lot of directions that you guys [China] are not going to enjoy.”

Xiaomi and every Chinese handset manufacturer need TSMC to provide reasonable domestic products and compete globally. These are things that will take a week for the pain to be felt, and where the solution to them is more painful than putting in some mines and building some refinery plants in Australia.

The idea that America has ceded escalation dominance on economic coercion because China found something that made the US feel some pain boggles the mind. I thought President Trump would say, “Fuck it. We’ll play that game.” It is unfortunate that he seems to be more willing to play this game against allies than against adversaries. But maybe this is changing with the Bessent talk and with the Putin Truth Social post from last week. We will have three and a half years of this. I am confident that we will reach a point when this game will be played again and Trump will be ready to pull more economic triggers. We’ll have to see.

Chris McGuire: To give a concrete example of where we could mirror — the controls on magnets had a significant impact on our auto industry. US auto firms were saying, “Hey, we’re going to shut down soon if this doesn’t get solved.” That is a strategic problem for the United States.

I wonder how long BYD 比亚迪 would be able to operate without access to US technology or chips from TSMC. Probably not that long. Many BYD cars use 4-nanometer chips to run their ADAS systems. BYD has done a great job indigenizing most of their supply chains: everything from legacy semiconductor fabs to the ship carriers that move the cars around the world is owned and made by BYD. The one thing that they have not been able to indigenize, and that they still need for their sophisticated chips, is advanced semiconductor production.

Things keep coming back to this, and it becomes a little repetitive — “Oh, you guys keep talking about chips” — but it’s because it’s the foundation of so many products and it’s the area where the US has advantages. In this example, a tit-for-tat escalation would have been: “Hey, our auto firms are about to shut down because we don’t have rare earth magnets. BYD is going to shut down because they’re not going to get chips until we resolve this.” Then we could pull both those back to make sure that we’re not taking either of those actions while continuing to take the necessary separate actions on AI. That’s one way we could have gone about it and could still if this rears its head again.

Jordan Schneider: On Trump 2.0 tea leaf reading, we had a BIS 50% rule, which is something that the Biden administration never got across the finish line. What is it? Why does it matter? And what does it imply about the future of policy?

Chris McGuire: This is a good change that BIS made this week. It’s important and will have a significant impact. It may not affect AI chips directly, but will have a substantial trickle-down effect on all of export control policy.

The way that export controls work is there are certain things that are controlled countrywide — and those are the most important and robust controls that we can implement. But for a number of other things, we control them to entities of concern. The entity goes on the entity list, and then all US exports — or many, depending on what the licensing policy is — are blocked to them.

The way it worked until this week was that every single subsidiary had to be specifically listed on the list. If it wasn’t listed, then exports were okay. It was a presumption that exports are fine unless the subsidiary is specifically listed. This creates massive loopholes and is easy to exploit. Someone can create a subsidiary that isn’t listed, and then it becomes easier to export to them. There are various due diligence requirements, but that checks a lot of boxes and makes it much easier for firms to export.

Under the new rule, any company that is a wholly owned subsidiary of Huawei or SMIC or others (CXMT, YMTC, et cetera) is now on the entity list, whereas before it was not. That’s a significant change.

This also applies globally. It applies to Russia, it applies to Iran. There was a shell game that a lot of entities played and it never made sense. The Treasury Department, with respect to sanctions, has exactly this rule. They say if we list an entity on the SDN list, then if there’s an entity that’s majority-owned by one of those firms, it’s also covered. We expect people who do business with entities to do their own research and make sure that they’re not inadvertently working with companies that are on the SDN list. If you do, then you are held responsible.
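The ownership logic described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the company names, the `Company` record, and the `threshold` of at least 50% aggregate ownership by covered entities are hypothetical, and the details of the actual BIS or Treasury rules may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Company:
    """A hypothetical company record used only for this illustration."""
    name: str
    # Maps owner name -> fraction of this company that the owner holds directly.
    owners: dict[str, float] = field(default_factory=dict)


def covered_under_old_approach(listed):
    """Old approach as described above: only names explicitly on the list."""
    return set(listed)


def covered_entities(companies, listed, threshold=0.5):
    """Return the names treated as covered under an ownership-threshold rule.

    Assumption: a company is covered if it is listed itself, or if covered
    owners together hold at least `threshold` of it.
    """
    covered = set(listed)
    changed = True
    # Repeat until nothing changes so coverage flows down chains of subsidiaries.
    while changed:
        changed = False
        for company in companies:
            if company.name in covered:
                continue
            share = sum(
                fraction
                for owner, fraction in company.owners.items()
                if owner in covered
            )
            if share >= threshold:
                covered.add(company.name)
                changed = True
    return covered


# Hypothetical ownership structure.
companies = [
    Company("ListedParent"),
    Company("WhollyOwnedSub", {"ListedParent": 1.0}),
    Company("SubOfSub", {"WhollyOwnedSub": 0.6, "Outsider": 0.4}),
    Company("MinorityStake", {"ListedParent": 0.3, "Outsider": 0.7}),
]
listed = {"ListedParent"}

print(sorted(covered_under_old_approach(listed)))
print(sorted(covered_entities(companies, listed)))
```

In this sketch the unlisted subsidiary and its own subsidiary become covered automatically, while the company in which the listed parent holds only a minority stake does not. It also shows why the burden shifts to exporters: they need ownership data, not just a list of names.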

Export controls are going to work the same way now, and they should. There’s no reason why one should be fundamentally different from the other, given what we’re concerned about — the diversion risk is substantial. This is a good change. To the point of where export control policy and China policy are headed and how this will play out over the next few years, this is indicative of the fact that we don’t really know. This is a good change, filling a big loophole that the Biden administration was not able to close.

It’s something the Trump administration has talked about from the beginning. Despite all the trade talks and narrative that everyone is walking away from controls, this action was still taken. That shows that there are still people who want to rebalance the relationship in ways that are in our interest and fix the loopholes in the tools we have.

This isn’t a perfect solution. There will be Chinese counter moves. Chinese companies will create shell companies that own 51% that aren’t affiliated with the parent in order to get around it. It will still be a whack-a-mole game. That’s why technology-based controls are going to be most important, because that’s the only way we can be sure that we’re not playing whack-a-mole. This will make companies think twice. It will increase the amount of due diligence that’s necessary and close loopholes that were being abused as of last week.

Jordan Schneider: Chris, what is your thesis on the loopholes and the fact that we would record ChinaTalk podcasts about them three days after the regulations came out, and then they would change maybe six or twelve months later?

Chris McGuire: I lived a lot of that.

One point is that government is about compromise. These things are hard, and they do have, or have the potential to have, significant impacts on US businesses. There’s a lot of lobbying from businesses. It’s one thing when you’re on the outside of the government to throw stones, but it’s different when you’re making the decisions that are going to reshape industries and economies. People are careful, and Democrats in particular are careful and deliberative. That means the default is to be cautious.

The totality of the approach that has been taken, on a bipartisan basis across the first Trump and Biden administrations, was a more assertive and different policy than the United States typically takes with respect to technologies or economic issues, and it’s important to keep that in perspective.

There were some loopholes that people pointed out right away — things that some of us tried to fix and weren’t able to. Sometimes that’s because of US industry concerns. Sometimes it’s because of working with allies, and those were tough negotiations where we weren’t able to get everything we wanted, but we were able to get a lot. Sometimes it’s that regulating on the frontier is hard. The government has gotten better about doing that starting in 2022. There were some big fundamental errors in the first 2022 export controls that did take a long time to correct. That is a function of how long it takes to get something through the system.

The controls in 2022 had loopholes that were a result of technological developments that happened while we were developing the controls. That is something that’s going to happen in this space, and the government has to be nimble in responding. The failure on the government’s part with respect to those controls was in its slow response. We should be able to fill loopholes quickly and agilely while also admitting that they’re going to happen. We should do our best to make sure that they don’t, but as long as we’re able to fill them quickly, that’s the goal here.

Jordan Schneider: What’s your normative argument for why America should be hobbling domestic Chinese AI hardware production?

Chris McGuire: Number one, if you buy into the idea that AI is going to be one of the most important things in all elements of the economy and also for national security, then it’s an area where we need to maintain the largest possible lead as a fundamental principle. That’s the baseline here.

If they don’t have a domestic semiconductor ecosystem, then their influence over the entirety of the AI ecosystem is going to be inherently either reliant on us or next to zero.

If you think this is the thing that’s going to underpin the global economy and US technological supremacy and national power generally going forward in every single element and domain, then the single biggest risk you could take to US leadership is to allow the Chinese to make advanced chips. That’s the bottom line here.

Jordan Schneider: What’s the best answer you can give about how AI hardware matters for the military balance of power?

Chris McGuire: There are three big areas I’ll flag.

The first is backend logistics and decision making. It’s kind of boring, but the US armed forces are the world leader in backend logistics and decision making. The reason we’re able to get munitions on target anywhere in the world in 24 hours is partly because we have amazing capabilities, but it’s also that we’re good at logistics.

We’re able to position tankers around the world and they know exactly where right away. We have a lot of experience at this, and we do a lot of training and drills. If you can automate all that — that’s just one discrete example — that takes away a significant source of the US’s military advantage.

The second is cyber capabilities. A lot of people talk about AI plus cyber, but in the operational context, you can imagine how significant it would be if you have capabilities that can get around defenses easily and exploit vulnerabilities and put sophisticated malware into entities. That would allow you to do significant things that quickly change battlefield dynamics.

The third is autonomous systems, and this is where AI inference is really important. Having a good AI model that you put on a bunch of drone systems to autonomously work together and take actions in a comms-degraded environment on their own will change the battlefield. But you’re going to need massive inference capabilities to do that. Think about the number of queries that will be needed. If it’s on the edge, you’re going to need all these systems to be processing these inference queries on the system itself. Or if it’s not in a comms-degraded environment, they’re all going to have to be going back home constantly, and that’s going to require very big inference clusters.

If we’re talking thousands or tens of thousands of drones, all of these are going to be constantly having inference-heavy requests on the AI models. You’re not only going to need a sophisticated model, but you’re going to need a lot of infrastructure to support the compute needs of your battlefield.

Those two are going to be reliant on having the hardware to make this model that’s super sophisticated and also be able to operationalize and run it in real time. The more capacity you have and the better those capabilities are, the more you’ll be able to do.

There are both military and commercial needs. If you assume that they prioritize military needs, then you could take a bite out of your commercial inference capacity in order to support that. True, but the more that you constrain this, and as those compute needs go up for the military capabilities, the more that will be a constraint going forward. That’s an area where not only do we not want American hardware supporting Chinese military processing capabilities, but we also shouldn’t want American hardware supporting the broader ecosystem that enables the Chinese to use foreign chips for commercial purposes and domestic chips to power the military purposes.

Not to say that China should be completely cut off — maybe there are ways to aggregate that — but even if China is completely reliant on US cloud, which is a separate debate that we could have, that’s something where in the event of a conflict you could shut that off right away and which imposes hard choices on the Chinese. Whereas if you export them the chips and they have a large supply of chips, then they can slice and dice for their military and commercial purposes.

Jordan Schneider: The other important normative question that I’m going to keep asking a lot on ChinaTalk is: to what extent do you think it is America’s responsibility to keep China down economically?

It’s a bit of a false question because, as you said, Chris, if this is what you are most worried about, let AWS sell access to Nvidia chips into China from data centers in Malaysia and you’ll figure out the latency. The visibility that the US has on what that’s being used for, whether it’s optimizing grocery logistics or optimizing PLA logistics, is something that you can look at. In the event of a conflict, China then doesn’t have this strategic resource that it is able to mobilize against American interests.

Given that semiconductors are dual-use technology, how do you address the argument that US export controls are primarily about constraining China’s rise rather than legitimate security concerns?

Chris McGuire: I completely agree with your point that this is a false choice because there are ways to manage the competition such that we provide access — if that’s your choice — to the full American AI stack. By “full,” I mean US chips on US cloud, potentially running US models to the Chinese in ways that allow them to benefit economically, but give you the lever in a crisis. There’s a separate question on whether, given the dual-use nature, that’s a good idea. This is a hard dynamic because the policies that we’re taking have historical precedent.

The US long controlled supercomputer exports to the Soviet Union. There were some efforts to collaborate, but they involved intrusive verification measures. That’s an area where we have always doubled down on compute processing.

The difference is that supercomputers are now available in a box off the shelf from Nvidia, AMD, and others. That’s the dynamic that we’re responding to: what do we do in that circumstance? Whereas previously they had to have them built at US national labs and it required all sorts of specialized expertise, now they don’t. This sophisticated technology that the United States has long guarded closely has become commercialized and commoditized, which is great for innovation but poses hard policy challenges with respect to this longstanding policy of preserving our edge on supercomputers.

The question there is: what do you want to preserve? Do you want to preserve a longstanding approach that maintaining our edge in compute is key to national competitiveness? Or do you want to argue that restricting commercial products is going to have deleterious impacts on our long-term vision for the global economy? The former outweighs the latter, and it means that there are costs to this. It probably means that we can’t have our cake and eat it too with respect to Chinese AI — or the Chinese can’t have it. If we take this approach, our ecosystems are going to be further and further apart and the onus is on them to indigenize. We are going to move our separate ways.

What we should do is find other ways to make sure that the Chinese can benefit from advances in, and even the use of, US models. The Chinese could use US models to support their companies, at least right now. I know Anthropic has moved to cut that off because they have national security concerns on that front. That is a frontier where once you have concerns there, it becomes difficult. But there are multiple hurdles that we could jump over before we have to say we’re completely separate — whether it’s cloud access or model access. Maybe that’s where we end up, but it’s certainly a false choice right now.

Jordan Schneider: What’s your take on the Silicon Shield argument — the idea that keeping China dependent on Taiwanese manufacturing is what’s stopping World War III?

Chris McGuire: The Chinese view Taiwan more in a historical context than through this economic and technological context. The Chinese know that a significant action vis-à-vis Taiwan would be flipping the game board.

There would be significant actions on either side, and the ability for them to operate as normal in that environment would be limited. No matter what, the Chinese recognize this. They’re looking more at what is their military capability and their readiness and what are the political dynamics and where’s the United States going to be, rather than what does this mean for our semiconductor production or our technology companies.

They know a move on Taiwan means they would incur substantial economic costs. The question is, are they willing to bear it? But I don’t think this factors nearly as much into their decision-making as the military balance of power and the overall geopolitics and whether or not they think this is something that they can implement and execute.

Jordan Schneider: The idea that cutting off BYD and Xiaomi from TSMC chips is what triggers an invasion does not make sense.

Chris McGuire: This is something that we’re going to have to grapple with. These are also scarce resources. 3-nanometer lines at TSMC are sold out and in very high demand. There are questions on whether it makes sense that we allow US tools to be used to make Xiaomi chips (Xiaomi 3-nanometer chips at TSMC) when American companies would presumably use that fab capacity if they didn’t. You could have a complicated debate on this, but that’s a reasonable policy question.

Inevitably, as technology gets more important, these chips get more important and the fab capacity is not going to advance at the quantity that you need to support all the technological needs that we’re seeing, especially as you see growth in robotics and other fields that are dependent on AI. Are we going to continue to allow China to design their own chips on these lines for their own companies? It’s a separate question of whether they can have any of them. Certainly them having US chips is fundamentally better than them having custom-designed chips. But this is something that we’re going to grapple with in the next one to two years because it will be increasingly unsustainable.

Jordan Schneider: Chris, what do you want to tell the kids? You had a remarkable arc in the civil service over the past decade, but this is a tricky time for young people thinking about replicating the path you took. Any reflections you want to share or advice you’d want to give?

Chris McGuire: It’s cool that ten years ago I was in grad school, writing various papers and thinking about what to do in government. Now I talk with so many people who are in grad school writing papers about the things that we did — not only the specific policies, but also this entire area. This idea of technology competition is in vogue now, and a small number of people have pushed effort and policies in the last couple administrations to make this a new topic.

That’s an amazing experience, and you can’t have that anywhere else but in government. You can write things on the outside and work at companies, but actually being able to craft the policies that design the future of technology competition: there’s nothing like it. Anyone who is interested in this space should aspire to do that because we need people who care about it and also know the details. We need people who can translate both the technical details up and the bigger-picture policy descriptions down.

It’s a tricky moment for the civil service. I dealt with my share of good and also bad civil servants, so I recognize that there’s a wide spectrum of capacity there. But I do have big concerns about the government’s technical expertise, particularly on these topics — not export controls alone, but anything with respect to AI and semiconductors and future forms of computing, quantum computing, things like that.

It’s hard to get people into government who know this stuff and care about it and can connect the dots on policy. The government needs to prioritize getting those people and keeping those people. There’s always lip service to that, but it’s not happening. Those people are leaving.

These are very hard policies to craft and implement, but they’re also hard to maintain because regulating at the frontier means that you have to constantly be updating and innovating. If we’re going to have a technology policy that actively tries to preserve America’s edge via technology protection policies, you need to be maintaining that every day. If you let it atrophy, it’s like water — it will seep through the cracks and eventually fail. That requires people who know this and are good.

I hope that the government sees that and recognizes that and prioritizes bringing those people in. I know there are people coming in who are good, and it’s a matter of prioritizing those voices and listening to them from a technical perspective, to make sure that the right information is being briefed.

There’s no alternative place to do this massive policy stuff that is going to shape the future of all these industries. We need the people to be there to do it.

Jordan Schneider: Another lesson of your career, which I’d love your take and reflection on, is the continual learning aspect. You have an MPP, you spent two years at McKinsey, not Intel. But this episode illustrates that you’ve been able to push yourself to be at and stay on the knowledge frontier when it comes to AI and technology competition for almost a decade now. That energy and that determination to stay up on this stuff is not something that you see in every civil servant and is not something that the systems in the civil service are incentivizing. What pushed you to spend that time learning all this? And what systemically do you think can be done to encourage people to stay on the knowledge frontier?

Chris McGuire: When I joined the civil service, I wasn’t doing emerging technology. I was doing nuclear weapons policy. I started doing nuclear arms control, which was an area I was interested in and had some historical connections to.

Jordan Schneider: What are your historical connections to nuclear weapons?

Chris McGuire: My grandfather was on the Atomic Bomb Casualty Commission, and he lived in Hiroshima from 1947 to 1949, studying the effects of the bomb. He was a pediatric hematologist, so he focused specifically on the effects on children and wrote some of the initial papers showing that nuclear weapon exposure leads to leukemia. He did the statistical analysis that demonstrated that proof. Growing up, I talked with him about that work.

Jordan Schneider: I love this lore.

Chris McGuire: Yeah. It’s a very complicated set of decisions around nuclear weapons use, but it became ingrained in me very early that this is important to US strategy, US power, and how we shape our view of the world. It became a topic that when I left the private sector and asked myself, “What do I actually care about? What do I want to work on?” — the strategic issues around nuclear weapons were the thing that pulled me.

I went to State. I actually had the US-Russia nuclear weapons portfolio, working on the New START Treaty and the INF Treaty. I oversaw the INF withdrawal when we discovered the Russians were cheating in 2018-2019. But it became apparent to me that this was last century’s strategic competition.

I made an active effort to pivot from nuclear policy into emerging technology.

That background gave me the strategic logic and baseline. My job there was translating these highly technical policy measures to the Secretary of State or other principals in terms of why this matters and how it works. That’s the very same skill you need with respect to AI policy.

What was helpful to me was being entrepreneurial within the civil service. I was constantly seeking out the next opportunity to push myself forward, learn more, and move up. That’s not something that, at least in State Department civil service, is structurally encouraged. It’s more, you are in your job, you’re going to be the expert, and you’re going to be in that job for 20 years because we need an expert in this for 20 years. That’s not how the economy works anymore, and it’s not what young people want to do.

If you are in the civil service, you have to seek out those opportunities yourself. If you sit back, the default will be that you stay in place. I was lucky — being at the right place at the right time, one job leading to another. But if you’re entrepreneurial about it, you put yourself in position to get lucky. I would highly encourage anyone in that space to constantly be seeking new jobs, details, or opportunities. I definitely pushed the limits of the amount of time you could be detailed away from the State Department without working there. It was a joke for many people inside the department.

But I was always pursuing the goals of the department, the country, and the government. I was working for people who wanted me to stay, and I was always able to stay because the mission was important and we’re all ultimately on the same team in the government. Even if you have to ruffle a few feathers with various backend HR people who are frustrated — “detailed again?” — if the National Security Advisor wants you to be there, who cares what the deputy head of the HR office has to say? You have to manage all the relationships, but if you’re good and you’re wanted and you’re entrepreneurial, you can do interesting things.

Jordan Schneider: It’s really interesting comparing that missile gap analogy to the AI stuff. We had a conversation about various exponentials when it comes to AI hardware. I’ve spent a fair amount of time over the past few months listening to nuclear podcasts after all the news around what’s going to happen to the American nuclear umbrella and the rate at which the technology develops with the new launchers and missile sites and bombers or submarines.

Comparing that to a new Nvidia chip every year and four AI video models dropping this week — I’m sure it was blowing people’s minds in the ’40s and ’50s and ’60s and ’70s. There were ranges of outcomes and it was unclear who was going to be able to scale up production and deliver weapons systems. But the amount of dynamism you see with emerging technology versus the nuclear missile second-strike dance is apples and oranges in the 2020s.

Chris McGuire: It’s interesting to think back. There were some crazy ideas out there in the ’50s: let’s put nuclear reactors in everything. Let’s put them in cars, in airplanes. We’re going to use nuclear explosions to power spaceships with a giant lead shield behind the spaceship to propel it forward to Mars. People were saying, “Well, this would work,” but will the physics work in ways that don’t kill a ton of people? There was crazy, dynamic thinking in that space, but it manifested less in the physical world. The manifestations in the physical world were slower but still significant in the ’40s, ’50s, and ’60s, though not at today’s timescale.

With AI, we have yet to see many of the physical manifestations of these advancements. It’s still not real for people. But once we start to see more capability advancements in the robotic space and the coupling of that, it’s going to be real.

On your nuclear weapons point — this is a strategic issue. Our procurement timelines for nuclear delivery systems, which are super important and underpin our deterrence architecture, are 30 years. How confident are we that those systems are going to fill the need they fill today in 30 years, given advances in AI and technology? How confident are we that ballistic missile submarines are going to continue to be invulnerable second-strike capabilities in 30 years? Are they going to be undetectable still? I don’t know. It’s not that hard to imagine advances that would make it easier to detect those assets in ways you can’t right now. What does that mean for our strategic calculus? There are synergies here that are concerning. We should be thinking through these issues. There are people thinking about this, of course, but I question whether — given Pentagon procurement timelines and things — we’re going to be at the frontier of responding.

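About a month ago, this channel ran an episode asking whether this generation of young Chinese still has a chance. After it aired, many listeners left comments telling their own stories. There was a girl from a poor rural family who, through unrelenting effort, broke through adversity that is hard to imagine, going from the countryside to the city and from the city overseas. There was a factory worker whose grandmother had been a small-business owner, but whose parents’ generation was stripped of everything and sank to the bottom of society; he resolved not to repeat his parents’ lives, started from nothing, and worked his way to the UK. There were also children of ordinary families quietly working to better themselves, and ethnic Chinese who fled Vietnam in small boats years ago. Each story is more remarkable than the last. Yesterday I picked 10 of them and posted them to the community page; you are welcome to go read them. Every listener told their story better than I tell mine.

After reading these stories, one listener commented: “These comments really are painted from lived experience. They have blood and tears in them and they move the heart, yet they also encourage everyone and bring people hope. Life is a song; you sing and sing, and then it has passed. Hope is a gift from heaven. Carry hope as you go forward, and past the shade of the willows and the brightness of the flowers, another village appears.”

Among those stories, one listener was especially modest, describing themselves as “a good-for-nothing failure” who “gave up along the way” and “ended up living like a pool of stagnant water.”

To this listener I want to say: whether a person is a failure, or has amounted to anything, is not measured by the results of their efforts. A person with clear-eyed self-knowledge cannot be a failure and cannot be a good-for-nothing. The good-for-nothings I talk about on the show are the vain, the arrogant, the self-deceiving, those whose heads are full of the opium of national greatness. I see no trace of that kind of person in your story.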

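This listener’s story reminds me of Hemingway’s The Old Man and the Sea. A lonely, destitute old fisherman takes a small boat out to sea. After great effort he catches a big fish and tows it home behind the boat, but a pack of sharks closes in and eats his catch down to the skeleton. When he gets back to the dock, all he has to show for it is exhaustion and that skeleton.

I started reading this book when I was young, and I still occasionally pull it out and read a few pages. It is not a story of success, but even less is it a story of failure. The encouragement and comfort it gives are no less than what any inspirational success story offers. What it shows us is the full dignity a person displays in the face of an irresistible fate.

Everyone’s circumstances are different. With the same effort, some get the result they expected and some do not. Some get it in one thing but not in another. There is no way to remove this element of chance from life. It does not mean a person is a good-for-nothing, still less that they have failed.

Examining and dissecting yourself honestly and bravely is a very precious quality. You are dissatisfied with your situation and feel your spirit is in torment precisely because of this clear-eyed self-knowledge. You know your predicament and face your weaknesses candidly. You have not blamed everything on external circumstances but have looked for causes in yourself. This kind of self-reflection takes great courage. People with that courage may well be only temporarily stuck, and they are worlds apart from those who blame heaven and everyone else and numb themselves with illusions.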

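Yesterday I went mushroom picking in the forest with friends, and we gathered armful after armful of cauliflower mushrooms. We soaked them all night and through the next morning to get the wood chips, dirt, and bugs out, then stir-fried them over high heat with red onion, green pepper, and minced meat, adding vinegar, chili oil, oyster sauce, and light soy sauce to bring out a richly layered aroma and texture. So on Mid-Autumn Festival we had a fragrant, sour-and-spicy, deeply flavorful plate of stir-fried mushrooms.

Just as we were about to dig in, 霸王花 suddenly asked, “What if they’re poisonous?”

Looking at the cauliflower mushrooms, already giving off a powerful aroma, I said: “Then we’ll get our birth and death dates on record: you died at 32, I died at 31. I never even saw the sun rise on my 32nd year.”

Fortunately, if you are reading this, it means I made it to 32!

The cauliflower mushrooms brought me nothing but invigorating deliciousness and no catastrophe. Better yet, on the very day I turned 32, I got to eat a feast that I had initiated: the Yibin ranmian (宜宾燃面, “burning noodles”) I had been dreaming about for more than a decade, and shuizhu pork (pork poached in chili broth) made with top-grade Ibérico pork (our chef had gotten a promotion and insisted on treating us to this pork, from a pig none of us had so much as seen run).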

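Why had I been dreaming about Yibin ranmian for more than a decade?

Because when I had just left my home village in northern Anhui to attend university in Beijing, and first had a phone, a computer, and internet access, a comic titled [人为什么要努力] (“Why Should People Strive?”) went viral. Its main storyline: the author was studying in an extremely laid-back city, with a shop right by her school that sold especially good and cheap Yibin ranmian, a place where one could eat ranmian every day without striving at all. Once, she and a friend cycled up a mountain several thousand meters high. Battered by sub-zero temperatures, altitude sickness, and mountain fog, she bitterly wondered why she had come up to ride at all. Why not stay at the bottom, bask in the sun, and eat ranmian?

Then, amid the complaining and regret along the way, the fog gradually lifted, and blue sky, a sea of clouds, and snowy peaks slowly came into clear view. Wang Anshi’s line came to her mind: “The world’s grand, strange, and extraordinary sights are often found in places perilous and remote, where few people go; so none but those with resolve can reach them.” This was scenery she could not have seen from the foot of the mountain.

She thought of ranmian again. Ranmian is delicious, of course, but if a person settles for a place where they eat ranmian, what about guobaorou and roujiamo?

In the end she said: people strive out of the desire to go see a bigger world.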

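The whole internet was inspired by this comic at the time, me included.

More than a decade on, the internet has been flooded by countless new crazes, the climate of the times has shifted again and again, the comic has long been forgotten, and the narrative of striving hardly motivates anyone in this era.

But I never forgot it.

I think of it often, because I am truly curious: I have eaten guobaorou, I have eaten roujiamo, but what on earth does Yibin ranmian taste like!? With a name that good, just how explosive is the flavor?

In my 10 years in Beijing I never once ate Yibin ranmian, because I was afraid the quality of Beijing’s ranmian would disillusion me, and that my longing to see a bigger world would be snuffed out along with it.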

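But over these ten-plus years I went to one world after another that was bigger for me. During a university summer break, on the way into the cloud-wrapped Dabie Mountains in Lu’an, my teachers, my friends, and I clutched big buckets on a bus winding along endless hairpin turns and vomited wildly from motion sickness. The day before, to save money, we had skipped the cable car at Huangshan, and that beautiful descent left our muscles so sore for the next few days in the Dabie Mountains that going downstairs was a struggle: we had to lift our legs with our hands and shuffle down one step at a time, and could only sit in the hostel looking at the mountain scenery outside. The same misery recurred last year when I took a pre-dawn bus in Peru at three to four thousand meters and motion sickness and altitude sickness hit together. I got through these painful moments on the road by silently reciting Wang Anshi’s “The world’s grand, strange, and extraordinary sights are often found in places perilous and remote...”

Humans are remarkable animals. The hardships of roaming are quickly forgotten by the body, but the scenery that met your eyes and the awe in your heart are not. The body’s mechanisms let us forget the pain once the wound heals, yet make beautiful things hard to erase from the mind and hard to let go of in spirit. They become precious gifts in the box the Creator gave us. Often, opening that box and polishing those gifts is enough to light up many dim moments in life and make a person long anew for tomorrow and for other places.

But with more roaming experience I also got smarter, able to suffer less and enjoy more.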

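When I went to Mount Bromo in Indonesia, I read plenty of guides online. They all said to get up at three or four in the morning, ride up pitch-black mountain roads, and compete with huge crowds for a spot to watch the sunrise, where it is easy to lose your footing and fall. I resolved not to put up with that, so I took a different route: skip the sunrise and watch the sunset. That way we had plenty of sleep, an unhurried itinerary, and no pressure to compete with anyone. We rode up taking in the scenery along the way, the mist, the trees, and the beautiful flowers. At dusk, with no other tourists around, we watched the sea of clouds and the sunset from the best vantage point together with friends joining by livestream, and even ate a delicious bowl of Indonesian instant noodles at our leisure among the beautiful clouds.

As long as you don’t compete with others for the crowded sunrise, you can enjoy the beautiful sunset at your leisure.

Over the past year I have come to suspect that Wang Anshi was running a scam: extraordinary sights are right there when I open my door. The beautiful scenery of this world really does not require going somewhere perilous and remote. As long as you pick the right place and have a pair of bright, shining eyes, that scenery may be right around your own house. Having moved to a new city and lived through a new spring and summer there, I found the double-flowered cherry trees near my home, the beautiful houses in the detached-villa neighborhood behind it, the plants and flowers each household chose to match the color of its house, and the tulips, daffodils, crabapple blossoms, and hyacinths on the lawn by my front door... Every day made me want to marvel at whether I was living in heaven, and heaven was not far away.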

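Before I set out roaming, I kept hearing a phrase that circulates online: “good mountains, good water, good and boring” (好山好水好无聊). I assumed it was a gradual slide into weariness that everyone must go through, and that I would go through it one day too. The narrative of “becoming disenchanted with the world and with travel after traveling the globe” is also everywhere, and I thought that would happen to me too.

But I have lived in the Netherlands for 4 years and roamed the globe for several, and the “good mountains, good water, good and boring” feeling has not found me even once. The Netherlands does not even have mountains, and I never tire of the scenery next to my home; whenever it is sunny I want to exclaim out loud. As for becoming disenchanted with the world and with travel because I have been to many places, that has not happened at all. Even after visiting many places in Asia, Europe, Latin America, North America, and Africa, I want to go out into this world again and again, and the places I still want to visit make up a long wishlist in my Google Maps.

The internet has a brand-new trending narrative every day, but that narrative from more than a decade ago, “the longing to go see a bigger world,” still has hold of me today.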

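Having lived to 32, I have gradually discovered that living is the process of living out your own essence.

A person can be fooled by some trending narrative, or fool themselves, for a while, but not for long. People who find good mountains and good water boring simply do not love mountains and water at heart. People who roam and then become disenchanted with roaming, travel, and the world altogether, and sink into weariness and emptiness, simply do not love roaming, still less the world. Not loving something is an essence, a plainly visible one.

Not loving study, not loving going to work, not loving going out, not loving entanglement and contact with people: these are all essences. Over the days and years of a life, as dripping water wears through stone, the truth surfaces: I simply don’t love it, and I just can’t fake it.

The more a person respects what they do not love in their essence, the more likely they are to discover what they do love in their essence.

The former is the precondition for the latter.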

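This year I discovered my essence more deeply: I don’t love going to work, don’t love doing meaningless things that create no real value inside an institution (the bigger the institution, the scarier), don’t love passively waiting for and accepting arrangements, don’t love messy, tangled details and data, and cannot tolerate bureaucracy.

The more fully I reject these, the closer I can get to what I love in my essence. What I love in my essence is my primal longing, a spark that does not go out once awakened. It is what I can keep being energized by, full of vitality about, or unable to forget, no matter how the trending narratives shift.

I love food; I love freedom; I love flowers, plants, trees, animals, rivers, and nature; I love going out to roam the world at my doorstep and other places near and far; I love creating; I love initiating things; I love changing status quos that are awful, despairing, and unreasonable; I love reading content and new knowledge that brings aha moments, enlightenment, and insights; I love browsing vintage shops, museums, and exquisitely designed shops and buildings that bring great aesthetic pleasure; I love sharing, marketing, and recommending every beautiful thing I discover; I love finding the causes of and solutions to stubborn problems; I love finding the clear logic behind chaotic and complex things...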

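But being able to reject what you do not love and satisfy what you do love, to fully live out this two-sided essence, takes great resolve. You have to do everything you can to reject what you do not love in order to create space and leave an opening for the loves in your essence that may not yet have revealed themselves.

The paradox, though, is that the things we do not love are often tied to survival. When a person wants to reject them, they face a survival crisis.

Looking back on what I have done these past two years, what fills me with admiration for myself is this: during the time I was rejecting what I do not love, I still found ways to rescue myself from the survival crisis, and created a vast space in which to satisfy the loves in my essence.

It looked like an impossible triangle, yet I made it happen.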

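As a Chinese person living in the Netherlands, you can basically only obtain each year’s legal residence through study, work, or a relationship/marriage, until you have lived here legally for 5 years and can apply for permanent residence or a passport.

In China I spent five or six years in high-intensity jobs that left me exhausted in body and mind and utterly sick of it. After nearly a year in a job with work-life balance in the Netherlands, I realized that what I don’t love is work itself. But without a job there is no work visa and no legal residence; relationships and marriage are the essence of the essence of what I do not love (I dislike them even more than work and would refuse them ten thousand times harder); and tuition for formal study is now extremely high (easily 20,000 euros a year).

But I exhausted every approach and found a one-of-a-kind method (it was never my original plan; I got there step by step and was quite shocked by where I ended up): for a little over two hundred euros I obtained a legal visa good for more than a year, and even received a voucher worth over 1,000 euros for an excellent Dutch language course (all told, I came out more than 800 euros ahead).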

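As a result I have had leisurely time to meet and celebrate with friends in all sorts of beautiful places, eat extraordinarily delicious food, hike mountains, trace streams, stargaze, trek, pick mushrooms, and cook and eat lavish feasts, all without spending much money. I also write and read every day, study widely whatever I want to learn, make solo, two-person, and three-person podcasts, built a website (www.boomlaodeng.nl) without knowing how to code, and offer consultations on the 游荡者 platform (www.youdangzhe.com), which fully satisfies my love of finding the causes of problems and their solutions.

Had I not refused, had I kept doing what I do not love, I would not have had the time or vitality to do any of these things I love. I would be tired, weary, empty, nihilistic, and listless while doing nothing, disenchanted with everything, and at the same time anxious and at a loss.

A listener in Edinburgh asked me, via 霸王花: the Netherlands is at a higher latitude and farther north than the UK, so why are you so full of vitality?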

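First, the Netherlands is not actually farther north than the UK. The two countries basically face each other at the same latitude across the North Sea, and the UK’s territory is much larger than the Netherlands’, so many places in the UK are farther north than the Netherlands.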

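Of course, the correlation with latitude is not very strong. The fundamental reason is that I always find a way to make it happen: rejecting what I do not love, resolving the survival crisis, and giving my time and energy to everything I love.

Through this, I have lived out my essence more fully. Vitality is a by-product that follows once you have lived out your essence.

I have even found that the vast majority of outstanding creators within my view, all the humans who live with soul, have fulfilled that precondition: rejecting what they do not love.

Many of them are even braver than I am, because they will refuse even at the cost of living in poverty, being stretched thin, and being seen by others as unstable and unreliable.

I, on the other hand, maneuver as best I can to keep myself out of a dire survival crisis. Another key reason, of course, is that I love freedom, and I must do everything I can to earn myself a freer status. I can be poor, but I cannot reside illegally; I need to persist legally until the moment I am freer.

To make it happen is to make the loves in your essence happen.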

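What you love does not fade even after more than a decade. I wanted to eat Yibin ranmian over ten years ago. Because I never forgot it and kept bringing it up, this year in the Netherlands I finally befriended someone from Yibin, and through my marketing and initiative, I finally got to eat it on my 32nd birthday.

Ranmian, “burning noodles,” makes the most fired-up birthday noodles.

Before this, by finding ways to make things happen, I made the Yunnan 火烧云 braised chicken I craved happen, made Shanghai shengjian buns happen, made Xinjiang pilaf happen, made luosifen hot pot happen, made hairy crab happen, made dry-pot pork intestines and stir-fried liver and kidney happen, made Yunnan hongsanduo and Guizhou sour-soup beef hot pot happen, and made everything I wanted to eat happen in the culinary desert that is the Netherlands.

It also let me, a girl who walked out of an impoverished village more than a decade ago, make that chicken-soup-for-the-soul maxim, “people strive out of the desire to go see a bigger world,” happen in my everyday life.

So anything I long for had really better watch out. Whether I go straight ahead at full tilt, or advance a pawn a day with no effort wasted, or take a roundabout route and besiege Wei to rescue Zhao, or stop and start and announce it once I meet the right people, I will always make it happen.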

Make it happen. Get it done. Sooner or later.

is a fellow at the Oxford China Policy Lab and an MSc student at the Oxford Internet Institute.

On September 11, the U.S. Federal Trade Commission launched an inquiry into seven tech companies that make AI chatbot companion products, including Meta, OpenAI, and Character AI, over concerns that AI chatbots may prompt users, “especially children and teens,” to trust them and form unhealthy dependencies.

Four days later, China published its AI Safety Governance Framework 2.0, explicitly listing “addiction and dependence on anthropomorphized interaction (拟人化交互的沉迷依赖)” among its top ethical risks, even above concerns about AI loss of control. Interestingly, directly following the addiction risk is the risk of “challenging existing social order (挑战现行社会秩序),” including traditional “views on childbirth (生育观).”

What makes AI chatbot interaction so concerning? Why is the U.S. more worried about child interaction, whereas the Chinese government views AI companions as a threat to family-making and childbearing? The answer lies in how different societies build different types of AI companions, which then create distinct societal risks. Drawing from an original market scan of 110 global AI companion platforms and analysis of China’s domestic market, I explore here how similar AI technologies produce vastly different companion experiences (American AI girlfriends versus Chinese AI boyfriends) when shaped by cultural values, regulatory frameworks, and geopolitical tensions.

In my team’s recent market scan of the 110 most popular AI companion platforms as of April 2025, we turned Similarweb and Sensor Tower upside down to gather data on traffic, company profiles, and user demographics. At the expense of one teammate developing an Excel sheet allergy and the shared trauma of viewing many NSFW images, we discovered that American AI girlfriends rule the roost in the global market for romantic AI companions: Over half (52%) of these AI companion companies are headquartered in the U.S., drastically ahead of China (10%) in the global market. These products are overwhelmingly designed around heterosexual male fantasies: another similar market report this year shows that 17% of all the active apps have “girlfriends” in their names, compared to 4% of those with “boyfriends.”

We estimated that dating-themed AI chatbots, designed specifically for romantic or sexual bonding, capture roughly 29 million monthly active users (MAU) and 88 million monthly visits globally across platforms. For comparison, Bluesky has 23.2 million total users and 75.8 million monthly visits as of early 2025. And our estimation is very conservative: We did not count the traffic of platforms containing other kinds of companionships, such as Character AI, which offers AI tutors, pets, and friends, though we think many people go there to use AI boy/girlfriends. We did not count AI companion app downloads, which have reached 220 million since 2022. Nor did we include parasocial engagement with general-purpose AI like GPT-4o, which some people apparently have also fallen in love with.

Behind the explosive popularity of AI companions are two main engagement models. On one side are community-oriented platforms like Fam AI, where users create and share AI companions, such as customizable “girlfriends” in anime or photorealistic styles. These platforms thrive on user-generated content, offering adjustable body types, personalities, and voice/video modes to deepen connections. Users can create new AI characters with just a few paragraphs instructing the model how to act, similar to personalizing a copy of ChatGPT. Many of these platforms use affiliate programs — for example, craveu.ai pays users $120–180 for creating high-engagement characters. The abundance of options and the competition for attention encourage users to frequently switch between different AI companions, creating more transient digital relationships.

In contrast, product-oriented platforms like Replika offer a single evolving AI partner with deeper and longer emotional ties. On Replika’s subreddit, many users report using Replika for years, and some seriously consider themselves “bonded” and “married” to their Replika partner. People also grieve the loss of their Replika when they sense a subtle personality change and suspect that the system behind it has reset their chatbot.

Despite differences in engagement style, both models seek to capitalize on sexuality to attract and retain users. The monetization of sexuality is done mainly through “freemium” models, offering a few free basic functions while charging for advanced features or additional services. Among the top ten most-visited AI companion platforms in our scan, eight opt for freemium models, with only one currently free and one choosing advertising and in-app currency. Premium accounts typically offer unrestricted interaction and access to unblurred explicit images. They also allow the user to have longer conversations and improve memory capacity for previous conversations. Many dating companion platforms promote explicit ‘NSFW’ (not safe for work) companions, images, and roleplay features as part of the premium features.

On the other side of the Great Firewall, AI is also probing the emotional boundaries of humans. While the underlying LLMs may not differ drastically from their English-speaking counterparts, the fictional worlds and characters that users build around them are strikingly distinct.

One of the most notable contrasts lies in gender dynamics. In the Chinese AI companion market, male characters dominate: most trending products are marketed as AI boyfriends, and leading platforms prominently feature male characters on their main displays, while female characters occupy a more marginal space.

But looks are not everything that makes humans appealing, and the same holds for AI characters. While many platforms still follow the community-oriented model where users create and share AI characters, apps like MiniMax’s Xingye (星野), Tencent-backed Zhumeng Dao (Dream-Building Island 筑梦岛), and Duxiang (独响), built by a startup, go beyond the basics. In addition to customizing AI companions’ personalities, users can generate themes, plots, and side stories, deepening immersion for themselves and others. Conversations are no longer limited to 1:1 exchanges: users can participate in group chats with multiple AI companions (1:N), and AI characters may even send messages to users when they are not using the app, similar to app notifications.

These AI companion products also draw insights from existing popular gaming cultures in China, such as card-drawing games that already have million-dollar markets. For example, Xingye allows users to generate 18 cartoon cards for one fictional character, adapting Japan’s popular gacha game mechanics and trading card culture for AI companions. In gacha games, players pay to randomly draw digital cards or characters, with rare editions commanding premium value. Chinese livestreamers have imported this model, streaming card draws on social media while viewers pay to test their luck for limited-edition collectibles tied to major intellectual properties. Similar to gacha games, AI-generated cards add an element of mystery and excitement when revealed. Users can also create and trade AI character photos on the platform, mimicking real-world card-collecting transactions.

The real monetary transactions occur through a combination of in-app currency and freemium models. Users purchase currency to buy cards and can upgrade to a monthly premium for more chances to generate AI cards, additional free in-app currency, and shorter wait times for conversations (a delay partly caused by limited compute capacity for Chinese LLMs). Card creators can also earn 2% of the revenue from the cards they sell.

Other AI companion companies also leverage users’ existing social behaviors. For instance, Duxiang’s AI WeChat Friend Circle allows AI partners to actively post on social media and interact with both users and other AI characters, mimicking real Chinese social media patterns. The company has even developed a wristband with Near-Field Communication (NFC) chips that connects to specific AI characters. When the wristband is tapped on a phone, the AI character appears on the screen to provide updates or show care, which builds a physical connection into existing digital relationships.

You can also read ChinaTalk’s previous article to learn more about other AI companion products and user experiences.