Tune for Performance: Do you really need a big internal SSD?

The most common economy that many make when specifying their next Mac is to opt for just 512 GB internal storage, and save the $/€/£600 or so it would cost to increase that to 2 TB. After all, if you can buy a 2 TB Thunderbolt 5 SSD for around two-thirds of the price, why pay more? And who needs the blistering speed of that expensive internal storage when your apps work perfectly well with a far cheaper SSD? This article considers whether that choice matters in terms of performance.

To look at this, I’m going to use the same compression task that I used in this previous article, my app Cormorant relying on the AppleArchive framework in macOS to do all the heavy lifting. As results are going to differ considerably when using other apps and other tasks, I’d like to make it clear that this can’t reach general conclusions that apply to every task and every app: your mileage will vary. My purpose here is to show how you can work out whether using a slower disk will affect your app’s performance.

Methods

In that previous article, I concluded that compression speed was unlikely to be determined by disk performance, as even with all 10 P cores running compression threads, that rate only reached 2.28 GB/s, around the same as write speed to a good Thunderbolt 3 SSD. For today’s tests, I therefore set Cormorant to use the default number of threads (all 14 on my M4 Pro) so it would run as fast as the CPU cores would allow when compressing my standard 15.517 GB IPSW test file.

I’m fortunate to have a range of SSDs to test, and here use the 2 TB internal SSD of my Mac mini M4 Pro, an external USB4 enclosure (OWC Express 1M2) with a 2 TB Samsung 990 Pro SSD, and an external 2 TB Thunderbolt 3 SSD (OWC Envoy Pro SX). As few are likely to have access to such a range, I included two disk images stored on the internal SSD, a 100 GB sparse bundle, and a 50 GB read-write disk image, to see if they could be used to model external storage. All tests used unencrypted APFS file systems.

The first step with each was to measure its write speed using Stibium. Unlike more popular benchmarking apps for the Mac, Stibium measures the speed across a wide range of file sizes, from 2 MB to 2 GB, providing more insight into performance. After those measurements, those test files were removed, the large test file copied to the volume, and compressed by Cormorant at high QoS with the default number of threads.

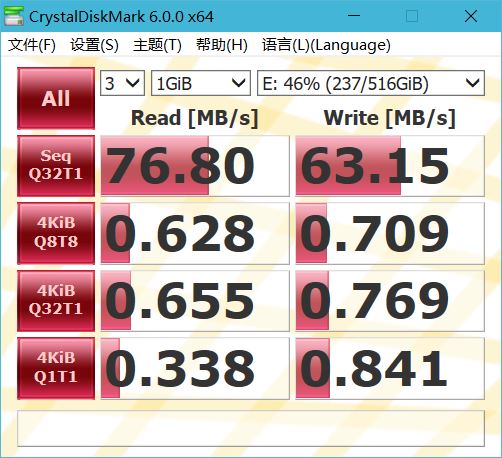

Disk write speeds

Results in each test followed a familiar pattern, with rapidly increasing write speeds to a peak at a file size of about 200 MB, then a steady rate or slow decline up to the 2 GB tested. The read-write disk image was a bit more erratic, though, with high write speeds at 800 and 1000 MB.

At 2 GB file size, write speeds were:

- 7.69 GB/s for the internal SSD

- 7.35 GB/s for the sparse bundle

- 3.61 GB/s for the USB4 SSD

- 2.26 GB/s for the Thunderbolt 3 SSD

- 1.35 GB/s for the disk image.

Those are in accord with my many previous measurements of write speeds for those types of storage.

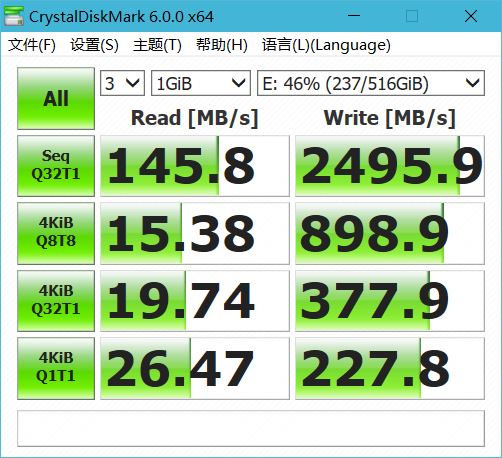

Compression rates

Times to compress the 15.517 GB test file ranked differently:

- 5.57 s for the internal SSD

- 5.84 s for the USB4 SSD

- 9.67 s for the sparse bundle

- 10.49 s for the Thunderbolt 3 SSD

- 16.87 s for the disk image.

When converted to compression rates, sparse bundle results are even more obviously an outlier, as shown in the chart below.

There’s a roughly linear relationship between measured write speed and compression rate in the disk image, Thunderbolt 3 SSD, and USB4 SSD, and little difference between the latter and the internal SSD. These suggest that disk write speed becomes the rate-limiting factor for compression when write speed falls below about 3 GB/s, but above that faster disks make little difference to compression rate.

Poor performance of the sparse bundle was a surprise, given how close its write speeds are to those of the host internal SSD. This is probably the result of compression writing a single very large file across its 14 threads; as the sparse bundle stores file data on a large number of band files, their overhead appears to have got the better of it. I will return to look at this in more detail in the near future, as sparse bundles have become popular largely because of their perceived superior performance.

The difference in compression rates between USB4 and Thunderbolt 3 SSDs is also surprisingly large. Of course, a Mac with fewer cores to run compression threads might not show any significant difference: a base M3 chip with 4 P and 4 E cores is unlikely to achieve a compression rate much in excess of 1.5 GB/s on its internal SSD because of its limited cores, so the restricted write speed of a Thunderbolt 3 SSD may not there become the rate-limiting factor.

Conclusions

- The rate-limiting step in task performance will change according to multiple factors, including the effective use of multiple threads on multiple cores, and disk performance.

- There’s no simple model you can apply to assess the effects of disk performance, and tests using disk images can be misleading.

- You can’t predict whether a task will be disk-bound from disk benchmark performance.

- Even expensive high-performance external SSDs can result in noticeably poor task performance. Maybe that money would be better spent on a larger internal SSD after all.